In April 2023, Facebook AI Research released the Segment Anything Model (SAM). It was a milestone in computer vision: SAM was the first computer vision model trained on over one billion masks. Besides benefiting from a massive labeled dataset, SAM supports flexible prompting. This means it can make strong predictions out of the box with minimal user input, generating object masks from point, multi-point, box, mask, and even text prompts!

Segment-geospatial v0.8.0 is out. New features include segmenting remote sensing imagery with text prompts interactively.

— Qiusheng Wu (@giswqs) May 24, 2023

Notebook: https://t.co/Bgc7fiBp7w

GitHub: https://t.co/KUOhmJyArU

Video: https://t.co/lMU03agDjN

Qiusheng Wu demonstrates how SAM can do a decent job segmenting trees in overhead imagery by just asking the model for "trees".

However, as we highlighted in our previous post, SAM is a two-stage model, and the first stage (the encoding step) is very compute-hungry! To make SAM run fast and build it into our GeoAI solutions, we’re releasing a tool that makes it easy to deploy SAM as a service on GPUs or CPUs: introducing segment-anything-services!
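Because the encoder is the expensive stage, the practical pattern is to encode each image once and then run the cheap decoder for every prompt against the cached embedding. The sketch below illustrates that idea only; `TwoStageSegmenter`, `fake_encode`, and `fake_decode` are hypothetical stand-ins, not code from segment-anything-services, and the stubs take the place of real SAM encoder and decoder calls.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

Embedding = List[float]
Point = Tuple[int, int]


@dataclass
class TwoStageSegmenter:
    """Hypothetical wrapper that caches the expensive encoder output per image ID."""
    encode: Callable[[str], Embedding]         # expensive stage (a GPU in practice)
    decode: Callable[[Embedding, Point], str]  # cheap stage (a CPU is fine)
    _cache: Dict[str, Embedding] = field(default_factory=dict)
    encoder_calls: int = 0

    def segment(self, image_id: str, point: Point) -> str:
        # Run the encoder only the first time an image is seen.
        if image_id not in self._cache:
            self._cache[image_id] = self.encode(image_id)
            self.encoder_calls += 1
        # Every prompt reuses the cached embedding and only runs the decoder.
        return self.decode(self._cache[image_id], point)


# Stub stages standing in for the real SAM encoder and decoder.
def fake_encode(image_id: str) -> Embedding:
    return [float(len(image_id))]


def fake_decode(embedding: Embedding, point: Point) -> str:
    return f"mask@{point}"


seg = TwoStageSegmenter(fake_encode, fake_decode)
for p in [(10, 20), (30, 40), (50, 60)]:
    seg.segment("sentinel2_tile", p)
print(seg.encoder_calls)  # 1: three prompts, one encoding
```

The same split is what lets a deployment keep the GPU busy only while new imagery is being encoded.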

By running the models in a TorchServe container, you can run the expensive encoder on a GPU on demand, whenever new imagery becomes available, and run the less expensive decoder to produce segments from the resulting image embeddings. (Read more about why we like TorchServe.) Running the image encoding step on a GPU makes a big difference: processing a 512x512 single-band image takes a few seconds instead of over a minute. Moreover, the service can automatically georeference your mask prediction if you supply a Coordinate Reference System (EPSG code) and bounding box coordinates! Check out this georeferenced mask prediction on a Sentinel-2 image, and run the notebook (after following the README) to test on your own GeoTIFF imagery.
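Georeferencing from a bounding box comes down to an affine transform from pixel space to the CRS. The following is a minimal sketch of that arithmetic, assuming a north-up image whose bounding box `(min_x, min_y, max_x, max_y)` covers the outer pixel edges in the CRS named by the EPSG code. The function names and the output shape are hypothetical and do not reflect how segment-anything-services implements this. Note that GeoJSON (RFC 7946) assumes WGS84 coordinates, so a ring in a projected CRS such as UTM would need reprojecting before strict GeoJSON consumers use it.

```python
from typing import Dict, List, Sequence, Tuple

Bbox = Tuple[float, float, float, float]  # (min_x, min_y, max_x, max_y) in the target CRS


def geotransform_from_bbox(bbox: Bbox, width: int, height: int) -> Tuple[float, ...]:
    """GDAL-style geotransform (x0, pixel_w, 0, y0, 0, -pixel_h) for a north-up image."""
    min_x, min_y, max_x, max_y = bbox
    pixel_w = (max_x - min_x) / width
    pixel_h = (max_y - min_y) / height
    return (min_x, pixel_w, 0.0, max_y, 0.0, -pixel_h)


def pixel_to_map(gt: Tuple[float, ...], col: float, row: float) -> Tuple[float, float]:
    """Map a pixel-corner coordinate (col, row) to CRS coordinates."""
    x = gt[0] + col * gt[1] + row * gt[2]
    y = gt[3] + col * gt[4] + row * gt[5]
    return x, y


def georeference_ring(ring_px: Sequence[Tuple[int, int]], bbox: Bbox,
                      width: int, height: int, epsg: int) -> Dict:
    """Hypothetical helper: turn a mask outline in pixels into a Polygon feature in the CRS."""
    gt = geotransform_from_bbox(bbox, width, height)
    coords: List[List[float]] = [list(pixel_to_map(gt, c, r)) for c, r in ring_px]
    return {
        "type": "Feature",
        "properties": {"epsg": epsg},  # CRS recorded as a plain property (assumption)
        "geometry": {"type": "Polygon", "coordinates": [coords]},
    }


# Hypothetical 512x512 tile with 10 m pixels in UTM zone 33N (EPSG:32633).
bbox = (500000.0, 4000000.0, 505120.0, 4005120.0)
ring = [(0, 0), (100, 0), (100, 50), (0, 50), (0, 0)]
feature = georeference_ring(ring, bbox, 512, 512, 32633)
print(feature["geometry"]["coordinates"][0][2])  # [501000.0, 4004620.0]
```

Row indices increase downward while map y increases northward, which is why the geotransform carries a negative pixel height.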

If you’d like to learn more about how we’re using SAM, check out this post, where we share our early experimentation with SAM and outline ways we think it will work with geospatial data. Segment Anything Services is one way to enable more people to use SAM and integrate it into their workflows in a way that helps optimize compute costs.

Run SAM on-demand

segment-anything-services allows you to use SAM without owning a GPU. It deploys SAM on AWS Elastic Container Service (ECS), runs it on demand, and then spins down the GPU service when it isn’t needed. This is a significant cost-saving measure, since the cheapest GPU instance on AWS that can run SAM, an AWS p3.2xlarge, costs about 3 dollars an hour!
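To put the spin-down savings in numbers, here is a back-of-the-envelope sketch. It assumes the roughly $3/hour rate quoted above (check current AWS pricing for your region) and a hypothetical workload of 2 GPU hours per day; both figures are illustrative only.

```python
# Approximate on-demand price of a p3.2xlarge (about $3/hour); check current AWS pricing.
HOURLY_RATE_USD = 3.0


def monthly_cost(hours_per_day: float, rate: float = HOURLY_RATE_USD, days: int = 30) -> float:
    """GPU cost for a month in which the service runs `hours_per_day` hours each day."""
    return hours_per_day * days * rate


always_on = monthly_cost(24)  # the GPU service never spins down
on_demand = monthly_cost(2)   # hypothetical: 2 hours of encoding work per day
print(f"always on: ${always_on:,.0f}, on demand: ${on_demand:,.0f}, "
      f"saved: ${always_on - on_demand:,.0f}")
# always on: $2,160, on demand: $180, saved: $1,980
```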

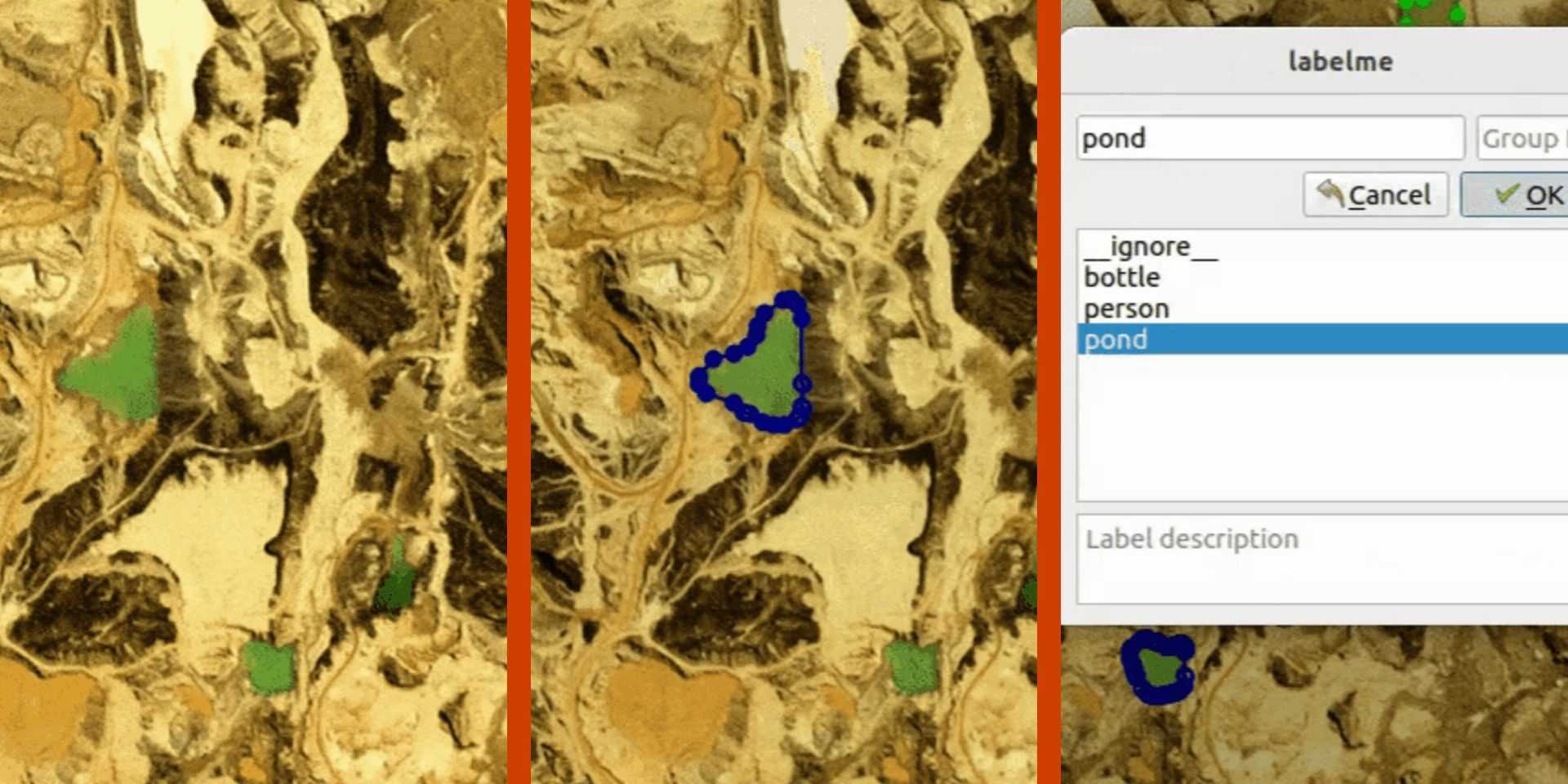

SAM will allow us to make these features available to folks without a GPU or ML model deployment expertise. We’re currently integrating SAM into DS-Annotate, a simple GeoJSON annotation tool, to explore how our partners can benefit from SAM for accurate, automated annotation.


Back to show some #segmentanything results on SPOT imagery! Here we're testing on 6 meter RGB.

— Ryan Avery (@rybavery) May 16, 2023

Up first is zero-shot prompting, where the model makes predictions with no user input. Overall I'm impressed, but I'm hopeful prompting improves results on roads and small objects.

A few of the experiments we've been sharing on Twitter as we explore the capabilities of SAM.

In the future, we’ll look to add endpoints for multi-point prompting, which uses more user input to produce even more accurate results. We’ll also investigate whether multi-point prompting on a faster, cheaper quantized model produces results equivalent to the original SAM model. Finally, we’ll investigate whether a version of SAM fine-tuned on hundreds of pre-existing labels can significantly outperform traditional supervised segmentation models on satellite imagery.
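For context on what a multi-point prompt looks like, the sketch below packs several clicks into the two arrays that the `SamPredictor.predict` method in Meta's `segment_anything` package accepts: `point_coords`, an (N, 2) array of (x, y) pixel positions, and `point_labels`, an (N,) array where 1 marks foreground and 0 marks background. The helper `build_point_prompt` and the click positions are hypothetical, and this is not the request format of any segment-anything-services endpoint. The model call is left as a comment because it needs a downloaded checkpoint.

```python
from typing import Iterable, Tuple

import numpy as np


def build_point_prompt(foreground: Iterable[Tuple[float, float]],
                       background: Iterable[Tuple[float, float]] = ()):
    """Hypothetical helper: stack clicks into SAM-style point_coords / point_labels arrays."""
    fg = list(foreground)
    bg = list(background)
    # (N, 2) float array of (x, y) pixel coordinates; reshape keeps (0, 2) when empty.
    coords = np.array(fg + bg, dtype=np.float32).reshape(-1, 2)
    # 1 = include this point in the object, 0 = exclude it.
    labels = np.array([1] * len(fg) + [0] * len(bg), dtype=np.int32)
    return coords, labels


coords, labels = build_point_prompt([(120, 88), (140, 95)], background=[(300, 210)])
print(coords.shape, labels.tolist())  # (3, 2) [1, 1, 0]

# With a loaded predictor (not shown), after predictor.set_image(image):
# masks, scores, logits = predictor.predict(
#     point_coords=coords, point_labels=labels, multimask_output=False)
```

Requesting a single mask (`multimask_output=False`) is the usual choice once several points already disambiguate the target object.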

We’re excited to keep building with SAM, so stay tuned for more updates!
