Time-Traveling Pixels: A Journey into Land Use Modeling

Estimated 7 min read

Our previous blog post emphasized the importance of using time series data for land use modeling.

Current State of LULC Models

Recent advances in land use and land cover (LULC) modeling follow two approaches: adapting existing models to LULC, or designing model architectures specifically for LULC.

Large foundation models have driven significant advances in AI and deep learning. These models are trained on vast amounts of data and can adapt to a wide range of domains and datasets. A prominent example is the Segment Anything Model, a foundation model for diverse segmentation tasks. We have already started to explore the potential of the Segment Anything Model for GeoAI. Other general-purpose options are image segmentation models such as FCN, U-Net, and DeepLab, which are commonly used with backbones pre-trained on ImageNet, a reference dataset of labeled photographic images.

These foundation models have the potential to solve general segmentation tasks, but they are limited to single-image inputs. As we have outlined previously, the time dimension tends to be crucial for accurate LULC modeling. An exciting area of GeoAI research focuses specifically on leveraging the time dimension. Models in this area combine convolutions, recurrent encoders, and self-attention, and achieve strong results by learning from time series data.

The following table summarizes the pros and cons of the two approaches to GeoAI.

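| Model type | Pros | Cons |
|---|---|---|
| Foundational or re-purposed | Trained on vast amounts of data; adapt to various domains and datasets | Limited to single-image inputs; cannot exploit the time dimension |
| Time series aware architectures | Learn directly from time series data | Require large amounts of high-quality, often custom, training data |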

Stepping Back to Move Forward

One major limitation of LULC modeling is the lack of training data. LULC models serve diverse goals, which often require custom training data. Detecting urban areas in northern Africa is a very different challenge from doing the same in western Asia or North America. Consequently, in many practical cases, training data must be created from scratch.

Foundation models are built to overcome this limitation, but because they accept only single images, they are a poor fit for LULC mapping, where temporal dynamics are important. Specialized time series models, on the other hand, require large amounts of high-quality training data that is almost impossible to create within reasonable time frames and budgets.

One way out of this conundrum is to step back and focus on the goals. If we simplify the models and leverage the most important aspects of the data, we can achieve high-quality results with less effort. We have experimented with models that leverage the multispectral and temporal nature of the data but ignore the spatial features of the land cover.

Spectral and temporal information in satellite imagery stacks can compensate for spatial context. In our experiments, pixel-based models could produce results of the same quality as 2D models, even without exploiting spatial characteristics.
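To make the pixel-based idea concrete, here is a minimal NumPy sketch, assuming the imagery is held as a `(time, band, y, x)` array. It turns each pixel into one training sample, its full time series of spectral values, so the model never sees neighboring pixels. The function names are hypothetical and not taken from our implementation.

```python
import numpy as np


def cube_to_pixel_samples(cube):
    """Flatten a (time, band, y, x) stack into one sample per pixel.

    Hypothetical helper. Each returned row is a single pixel's time series,
    shaped (time, band); no spatial neighbourhood is kept.
    """
    n_time, n_band, height, width = cube.shape
    # Move y and x to the front: (y, x, time, band).
    per_pixel = np.moveaxis(cube, (2, 3), (0, 1))
    # Merge y and x into one pixel axis (row-major: index = y * width + x).
    return per_pixel.reshape(height * width, n_time, n_band)


def predictions_to_map(pred, height, width):
    """Put one prediction per pixel back on the (y, x) grid."""
    return np.asarray(pred).reshape(height, width)


# Example: 9 composites, 10 spectral bands, a 4 x 5 pixel tile.
cube = np.random.default_rng(0).random((9, 10, 4, 5))
samples = cube_to_pixel_samples(cube)
print(samples.shape)  # (20, 9, 10)
```

Because the pixel index is row-major, predictions made per pixel can be reshaped straight back into a map with `predictions_to_map`.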

Simplified Training Dataset Creation

Creating training datasets for LULC projects is labor-intensive. Simplifying the labeling process is arguably the most significant advantage of putting spatial context aside. Labelers will not have to understand and trace spatial context in great detail. This makes generating the training dataset fast and straightforward.

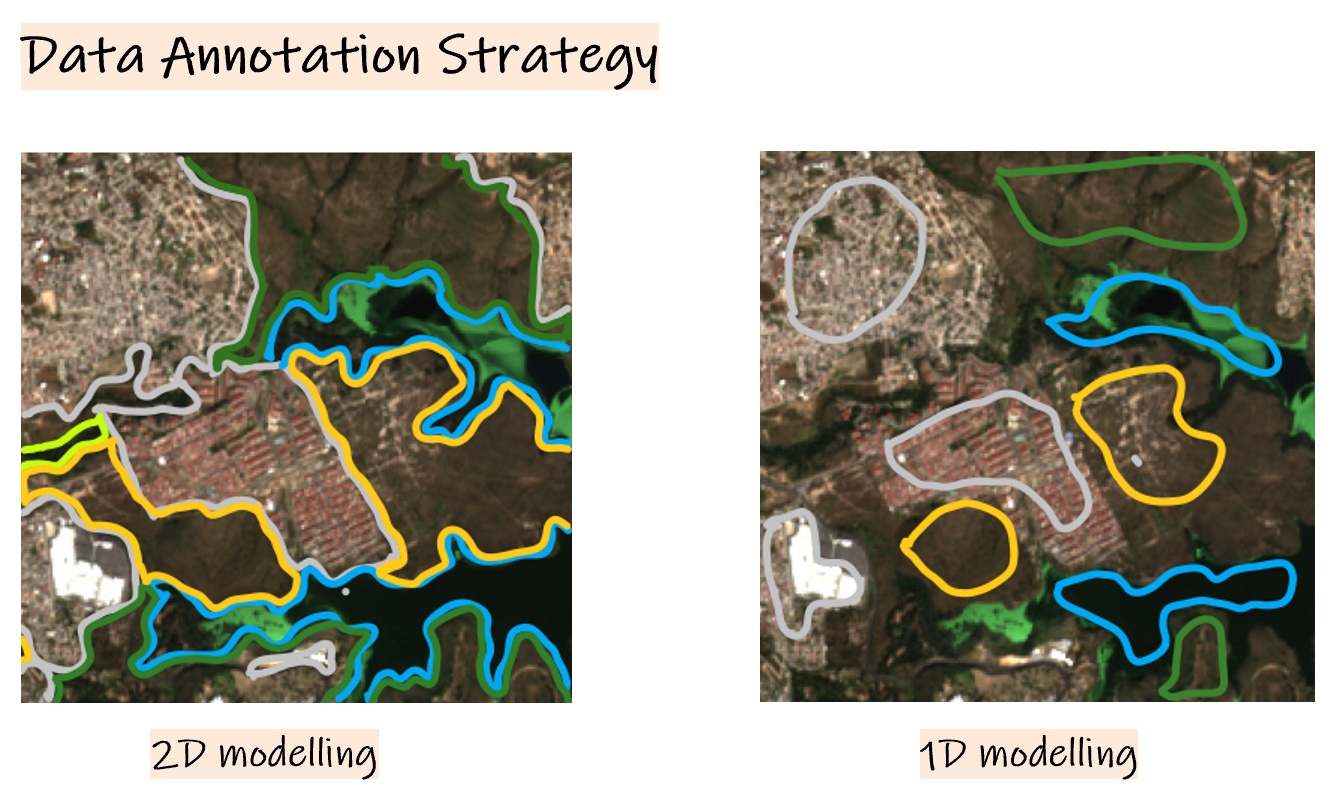

When drawing segmentation masks for 2D models, annotators need to label every pixel in the image (left image in Figure 1). This process can take 5 to 30 minutes per image, depending on the complexity of the land use classes or landscape.

The annotation approach for 1D models is simpler and faster. Annotators only need to label the pixels they are most confident about, without annotating every pixel or dealing with complex spatial details (right image in Figure 1). Not having to trace every detail of every feature considerably reduces complexity. This typically cuts labeling time to between 5 seconds and a minute per image.

Figure 1: 2D vs 1D Annotation
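Sparse annotations like these map naturally onto a pixel-based training set. The sketch below is a hypothetical helper that assumes labels are rasterized onto the image grid with `0` meaning "not labeled"; it keeps only the pixels an annotator marked and pairs each one with its time series.

```python
import numpy as np

UNLABELED = 0  # assumption: 0 marks pixels the annotator left blank


def sparse_training_set(cube, labels):
    """Pair each labeled pixel with its time series (hypothetical helper).

    cube:   (time, band, y, x) image stack
    labels: (y, x) integer raster, UNLABELED where nothing was drawn
    Returns X with shape (n_labeled, time, band) and y with shape (n_labeled,).
    """
    mask = labels != UNLABELED
    # A 2D boolean mask on the trailing axes selects pixels: (time, band, n_labeled).
    X = np.moveaxis(cube[:, :, mask], -1, 0)
    y = labels[mask]
    return X, y


# Example: two confidently labeled pixels in an otherwise unlabeled 4 x 5 tile.
cube = np.random.default_rng(1).random((9, 10, 4, 5))
labels = np.zeros((4, 5), dtype=int)
labels[0, 1] = 2  # class 2 at row 0, column 1
labels[3, 4] = 1
X, y = sparse_training_set(cube, labels)
print(X.shape, y)  # (2, 9, 10) [2 1]
```

Unlabeled pixels simply never enter training, which is what lets annotators skip the difficult parts of a scene.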

Compact Models

The simplified models are smaller and therefore easier to train and deploy. They have up to 1000x fewer trainable parameters than 2D convolutional models. Simplified models also require less data to achieve a good fit. Lastly, training and inference of smaller models are fast even on regular CPU instances, which makes running and using the models much less expensive. This can be a significant advantage in projects with resource constraints.
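A rough way to see why pixel-based models are so compact is to count parameters layer by layer. The sketch below uses the standard weights-plus-bias formulas; the layer sizes (10 bands, 32 channels, temporal kernel sizes 3 and 5, a 64-unit hidden layer, 6 classes) are illustrative assumptions, not the configuration of our model.

```python
def conv1d_params(in_ch, out_ch, kernel):
    """Weights plus one bias per output channel for a 1D convolution."""
    return in_ch * out_ch * kernel + out_ch


def conv2d_params(in_ch, out_ch, kernel):
    """Same for a square 2D convolution kernel."""
    return in_ch * out_ch * kernel * kernel + out_ch


def dense_params(n_in, n_out):
    """Fully connected layer: weight matrix plus bias vector."""
    return n_in * n_out + n_out


# Illustrative (assumed) pixel model: 10 bands in, two temporal convolutions,
# global average pooling over time (no parameters), then a small MLP.
pixel_model = (
    conv1d_params(10, 32, 3)
    + conv1d_params(32, 32, 5)
    + dense_params(32, 64)
    + dense_params(64, 6)
)

# For comparison: only the first double-convolution block of a typical
# U-Net (64 filters of size 3 x 3), again with 10 input bands.
unet_first_block = conv2d_params(10, 64, 3) + conv2d_params(64, 64, 3)

print(pixel_model, unet_first_block)  # 8646 42752
```

With these assumed sizes, the entire pixel model is smaller than the first block of a U-Net alone, and full U-Net implementations typically have millions of parameters. The exact ratio depends entirely on the architectures being compared.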

Swift Iteration

With faster turnaround in creating training data and training models, simplified models speed up iteration and make model refinement more efficient. Building an active-learning loop on top of these models becomes straightforward.

This is how the modeling cycle could look in practice:

1. Create the first training dataset within hours.
2. Train a model and create the first model predictions.
3. Create additional labels on top of the model output, using active learning to focus on the areas where the model needs the most help.
4. Go back to Step 2 and iterate until the model outputs are satisfactory.
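The post does not prescribe a specific active-learning strategy for step 3. One common choice is margin sampling: show annotators the pixels where the model's two most likely classes are closest in probability. A minimal NumPy sketch, with a hypothetical function name:

```python
import numpy as np


def select_pixels_for_labeling(probs, n):
    """Return indices of the n pixels with the smallest top-2 probability margin.

    Hypothetical helper; margin sampling is one common strategy, not
    necessarily the one used in the project.
    probs: (n_pixels, n_classes) class probabilities from the current model.
    """
    top2 = np.sort(probs, axis=1)[:, -2:]   # second-best, best
    margin = top2[:, 1] - top2[:, 0]
    return np.argsort(margin)[:n]


probs = np.array([
    [0.90, 0.05, 0.05],   # confident
    [0.40, 0.30, 0.30],
    [0.50, 0.45, 0.05],   # torn between classes 0 and 1
    [0.70, 0.20, 0.10],
])
print(select_pixels_for_labeling(probs, 2))  # [2 1]
```

Pixels with a small margin are where a new label is most likely to change the model, which is why a few targeted labels per iteration can go a long way.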

Forest Type Mapping Test Case

We tested this technique in a project where the goal was to differentiate forest types and landscape categories. Using a single-pixel model with a time series of 14-day composites over four months and ten spectral bands, we achieved the same quality as a 2D U-Net model.

The visualization in Figure 2 shows some of the pixel-based model outputs and the underlying imagery series for each prediction.

Figure 2: The model is robust to changing landscapes, seasonality & atmospheric effects.

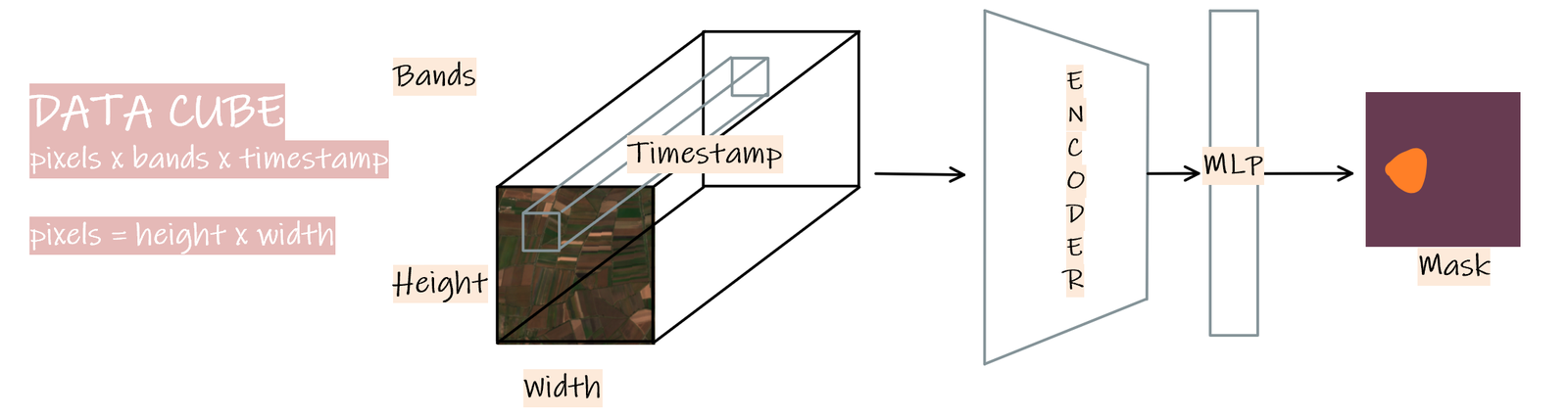

For this example, we developed a small pixel-based model rather than a deep one. The encoder is a two-layer 1D convolution block whose kernels capture different properties of the time series data cubes. The extracted features are fed into a multi-layer perceptron (MLP) that generates pixel-level masks.
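The NumPy sketch below shows the data flow of such a model for a batch of pixels: two 1D convolutions over the time axis, a pooling step, and a two-layer MLP with a softmax. The specific kernel sizes (3 and 5), channel counts, global average pooling over time, and random initialization are assumptions for illustration, and the class name is hypothetical.

```python
import numpy as np


def conv1d(x, w, b):
    """'Valid' 1D convolution over time.

    x: (n_pixels, time, c_in), w: (kernel, c_in, c_out), b: (c_out,)
    Returns (n_pixels, time - kernel + 1, c_out).
    """
    k = w.shape[0]
    t_out = x.shape[1] - k + 1
    # windows[:, t, i, :] == x[:, t + i, :]
    windows = np.stack([x[:, i:i + t_out, :] for i in range(k)], axis=2)
    return np.einsum("ntkc,kco->nto", windows, w) + b


def relu(z):
    return np.maximum(z, 0.0)


def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)   # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


class PixelTimeSeriesModel:
    """Forward-pass sketch of a small pixel-based time series classifier.

    Hypothetical sizes: two temporal convolutions with different kernel
    sizes, global average pooling over time, then a two-layer MLP.
    """

    def __init__(self, n_bands, n_classes, channels=32, kernels=(3, 5),
                 hidden=64, seed=0):
        rng = np.random.default_rng(seed)
        k1, k2 = kernels
        self.w1 = rng.normal(0.0, 0.1, (k1, n_bands, channels))
        self.b1 = np.zeros(channels)
        self.w2 = rng.normal(0.0, 0.1, (k2, channels, channels))
        self.b2 = np.zeros(channels)
        self.w3 = rng.normal(0.0, 0.1, (channels, hidden))
        self.b3 = np.zeros(hidden)
        self.w4 = rng.normal(0.0, 0.1, (hidden, n_classes))
        self.b4 = np.zeros(n_classes)

    def predict_proba(self, x):
        """x: (n_pixels, time, band) -> (n_pixels, n_classes) probabilities."""
        h = relu(conv1d(x, self.w1, self.b1))
        h = relu(conv1d(h, self.w2, self.b2))
        h = h.mean(axis=1)                      # pool over the time axis
        h = relu(h @ self.w3 + self.b3)
        return softmax(h @ self.w4 + self.b4)

    def n_params(self):
        return sum(p.size for p in vars(self).values())


model = PixelTimeSeriesModel(n_bands=10, n_classes=6)
pixels = np.random.default_rng(2).random((20, 9, 10))  # 20 pixels, 9 dates, 10 bands
probs = model.predict_proba(pixels)
print(probs.shape, model.n_params())  # (20, 6) 8646
```

This is forward-pass only. In practice such a model would be defined and trained in a deep learning framework; the NumPy version just makes the tensor shapes explicit. Note that with "valid" convolutions the series must have at least `k1 + k2 - 1` time steps (7 here).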

Because a pixel-based model predicts each pixel independently of its neighbors, it also avoids the edge artifacts that 2D models commonly produce at tile boundaries when working with tiled data.
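This tiling property is easy to check. In the sketch below, a toy per-pixel classifier (a random linear scorer standing in for a trained model, purely an assumption) produces identical maps whether it runs on the whole scene or tile by tile, because no prediction depends on neighboring pixels. Both function names are hypothetical.

```python
import numpy as np


def predict_pixels(cube, weights):
    """Toy per-pixel classifier standing in for a real model (assumption).

    cube: (time, band, y, x); weights: (time * band, n_classes).
    Each pixel's flattened time series gets a linear score per class.
    """
    t, b, h, w = cube.shape
    X = np.moveaxis(cube, (2, 3), (0, 1)).reshape(h * w, t * b)
    return (X @ weights).argmax(axis=1).reshape(h, w)


def predict_tiled(cube, weights, tile=4):
    """Run the same classifier tile by tile and mosaic the results."""
    h, w = cube.shape[2:]
    out = np.empty((h, w), dtype=int)
    for y0 in range(0, h, tile):
        for x0 in range(0, w, tile):
            block = cube[:, :, y0:y0 + tile, x0:x0 + tile]
            out[y0:y0 + tile, x0:x0 + tile] = predict_pixels(block, weights)
    return out


rng = np.random.default_rng(3)
cube = rng.random((9, 10, 10, 7))       # 9 dates, 10 bands, 10 x 7 pixels
weights = rng.normal(size=(9 * 10, 6))  # 6 classes
full = predict_pixels(cube, weights)
tiled = predict_tiled(cube, weights, tile=4)
print(np.array_equal(full, tiled))  # True
```

A 2D model run the same way would see truncated context at every tile edge, which is where seams come from; overlapping tiles are the usual workaround there, and the pixel model simply does not need them.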

The figure below illustrates the high-level model structure. For more details on how we implemented the model architecture, see the model gist file.

Figure 3: Data Cube model structure

Efficient Imagery Collection

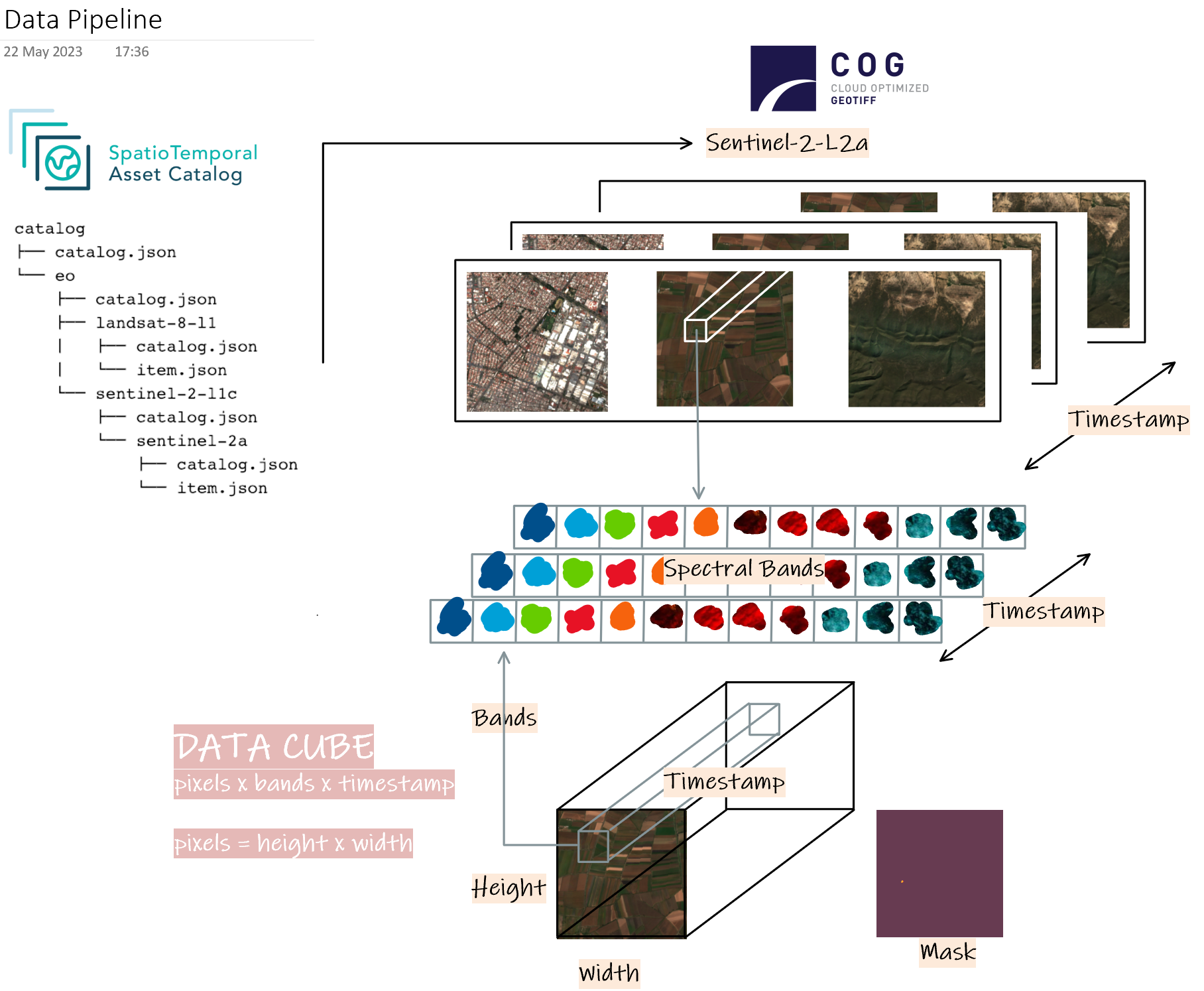

To query datasets efficiently, we used SpatioTemporal Asset Catalogs (STAC). We also took advantage of the Cloud-Optimized GeoTIFF (COG) format, which lets us read only the parts of an image we need directly from cloud storage instead of downloading whole files.

When querying datasets, we specified our requirements using metadata such as:

- Catalog type (e.g., "sentinel," "landsat," "hls," etc.).

- GeoJSON or bounding box for defining the geographic area of interest.

- Time range (e.g., "Jan 2021 - May 2022") to specify the temporal extent of the data.
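As an illustration, the sketch below turns these three requirements into keyword arguments for a STAC API search. The catalog-to-collection mapping and the function name are hypothetical: the IDs shown are those used by Element 84's Earth Search for Sentinel-2 and Landsat and by NASA's CMR-STAC for HLS, but collection names differ between providers, so check your catalog.

```python
from datetime import date

# Hypothetical mapping from short catalog names to STAC collection IDs.
# IDs vary by STAC API: the Sentinel-2 and Landsat IDs follow Element 84's
# Earth Search, the HLS IDs follow NASA's CMR-STAC.
COLLECTIONS = {
    "sentinel": ["sentinel-2-l2a"],
    "landsat": ["landsat-c2-l2"],
    "hls": ["HLSS30.v2.0", "HLSL30.v2.0"],
}


def build_stac_search(catalog, start, end, bbox=None, geometry=None):
    """Build STAC API search parameters from catalog, area, and time range.

    bbox: (min_lon, min_lat, max_lon, max_lat); geometry: a GeoJSON geometry
    dict. Exactly one of the two must be given.
    """
    if (bbox is None) == (geometry is None):
        raise ValueError("pass exactly one of bbox or geometry")
    params = {
        "collections": COLLECTIONS[catalog],
        # Date range as a "start/end" interval string.
        "datetime": f"{start.isoformat()}/{end.isoformat()}",
    }
    if bbox is not None:
        params["bbox"] = list(bbox)
    else:
        params["intersects"] = geometry
    return params


params = build_stac_search(
    "sentinel", date(2021, 1, 1), date(2022, 5, 31),
    bbox=(10.0, 45.0, 10.5, 45.5),  # illustrative area of interest
)
print(params["datetime"])  # 2021-01-01/2022-05-31
```

The dictionary keys match the keyword arguments of pystac-client's `Client.search` (`collections`, `bbox`, `intersects`, `datetime`), so with that library installed the parameters could be passed as `Client.open(api_url).search(**params)` and the matching items retrieved with `.item_collection()`.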

For each training sample, we collected a stack of imagery over time and composited it at regular intervals. In the example above, we used 14-day intervals with a simple cloud removal algorithm to reduce clouds where possible.
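The post does not detail its cloud removal algorithm. As one simple stand-in, the sketch below masks cloudy observations and takes the per-pixel median of what remains in each 14-day interval. It assumes a per-image cloud mask is available (for example, derived from a provider's scene classification band); the function name is hypothetical.

```python
import warnings

import numpy as np


def composite_intervals(stack, dates, cloud_mask, start, interval_days=14):
    """Median-composite a (time, band, y, x) stack into fixed-length intervals.

    Stand-in for the unspecified cloud removal step (assumption).
    dates:      datetime64[D] acquisition dates, one per image, all >= start
    cloud_mask: boolean (time, y, x), True where a pixel is cloudy
    Returns (n_intervals, band, y, x); NaN where an interval has no clear view.
    """
    # Drop cloudy observations in every band by turning them into NaN.
    clear = np.where(cloud_mask[:, None, :, :], np.nan, stack.astype(float))
    # Interval index per image: 0 for days 0-13 after start, 1 for days 14-27, ...
    days = (dates - start).astype("timedelta64[D]").astype(int)
    bins = days // interval_days
    out = np.full((bins.max() + 1,) + stack.shape[1:], np.nan)
    with warnings.catch_warnings():
        # nanmedian warns on pixels that are cloudy on every date of an interval.
        warnings.simplefilter("ignore", RuntimeWarning)
        for i in range(out.shape[0]):
            in_bin = clear[bins == i]
            if len(in_bin):
                out[i] = np.nanmedian(in_bin, axis=0)
    return out


dates = np.array(["2021-01-01", "2021-01-05", "2021-01-20", "2021-01-22"],
                 dtype="datetime64[D]")
stack = np.array([1.0, 3.0, 10.0, 20.0])[:, None, None, None] * np.ones((1, 2, 1, 1))
cloud_mask = np.zeros((4, 1, 1), dtype=bool)
cloud_mask[2] = True  # the 2021-01-20 image is cloudy
composites = composite_intervals(stack, dates, cloud_mask, np.datetime64("2021-01-01"))
print(composites[:, 0, 0, 0].tolist())  # [2.0, 20.0]
```

The median is robust to the occasional undetected cloud or shadow, and a fixed interval grid gives every sample the same number of time steps, which a pixel model with a fixed input size needs.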

For model training, we used xarray to create data cubes of pixel composites along the time dimension. These data cubes also included rasterized labels for each land use class. Figure 4 gives an overview of this pipeline.

Figure 4: Data pipeline
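A minimal xarray sketch of such a data cube follows. It assumes the composites are already a `(time, band, y, x)` NumPy array and the labels a rasterized `(y, x)` integer grid with `0` for unlabeled pixels; variable names, band names, and using pixel indices as `y`/`x` coordinates (instead of map coordinates) are illustrative assumptions. Stacking `y` and `x` into a single `pixel` dimension yields the per-pixel training table directly.

```python
import numpy as np
import pandas as pd
import xarray as xr


def build_training_cube(composites, labels, times, bands):
    """Wrap composites and rasterized labels in one xarray Dataset.

    composites: (time, band, y, x) array; labels: (y, x) integer raster.
    Pixel indices serve as y/x coordinates here (assumption).
    """
    n_y, n_x = labels.shape
    return xr.Dataset(
        {
            "reflectance": (("time", "band", "y", "x"), composites),
            "label": (("y", "x"), labels),
        },
        coords={"time": times, "band": bands,
                "y": np.arange(n_y), "x": np.arange(n_x)},
    )


def to_pixel_table(ds, unlabeled=0):
    """Stack y and x into one 'pixel' dimension and keep labeled pixels only."""
    stacked = ds.stack(pixel=("y", "x"))
    keep = np.nonzero(stacked["label"].values != unlabeled)[0]
    stacked = stacked.isel(pixel=keep)
    X = stacked["reflectance"].transpose("pixel", "time", "band").values
    y = stacked["label"].values
    return X, y


times = pd.date_range("2021-06-01", periods=9, freq="14D")  # 14-day composites
bands = [f"band_{i}" for i in range(10)]                     # placeholder names
composites = np.random.default_rng(4).random((9, 10, 4, 5))
labels = np.zeros((4, 5), dtype=int)
labels[0, 1], labels[3, 4] = 2, 1

ds = build_training_cube(composites, labels, times, bands)
X, y = to_pixel_table(ds)
print(X.shape, y)  # (2, 9, 10) [2 1]
```

Keeping reflectance and labels in one Dataset means they share coordinates, so selecting by time, band, or location always keeps images and labels aligned.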

Final Thoughts

We have highlighted the critical role of time series data in land use modeling. Temporal information is clearly important for accurately mapping land use, especially as deep temporal archives become more accessible. Including time series data in land use models enhances their robustness and accuracy.

Moreover, temporal context can be a viable alternative to spatial 2D context, which is often challenging to obtain. Substituting temporal context for spatial context makes creating training data for simpler models faster and more cost-effective. This substitution proves valuable in many practical use cases, showing that temporal context and spectral depth can effectively replace spatial context.

The proposed approach to LULC makes it possible to create maps from scratch under challenging circumstances, without any pre-existing training data. If your organization needs mapping but has no training data available, contact us to evaluate whether this type of modeling could be a way forward.
