The challenge is not collecting data; it is making sense of the billions of images we capture daily. Foundation Models bridge this gap, turning overwhelming data streams into precise, actionable insights about our planet.

Every day, satellites capture millions of images of our planet, creating a flood of information that traditional processing methods struggle to handle. From mapping rainforests to tracking global ecosystem changes, the challenge isn't just collecting data—it's extracting meaningful insights at planetary scale.

That’s where Geospatial Foundation Models (GeoFM) come in. These advanced AI models are breaking through traditional barriers in processing and interpreting Earth's vast data streams, creating dynamic, living models that help us better understand our world and make smarter decisions for the future.

Why Foundation Models Matter

Foundation models are large scale AI models trained on massive datasets. They have transformed the AI landscape, powering applications like ChatGPT, and fundamentally changing how we approach complex data problems. For geospatial applications, these models offer game-changing capabilities.

Geospatial Foundation Models offer a powerful solution for processing Earth's vast data streams. Starting with these pre-trained models eliminates the labor-intensive process of collecting and labeling data from scratch. These models maintain consistent performance across time and space, reducing data drift and providing reliable insights. In Human-in-the-loop (HITL) workflows, GeoFMs provide accurate initial results, allowing analysts to focus on refinement rather than basic labeling.

The true power of GeoFMs lies in their ability to create unique embeddings for every location on Earth. These embeddings serve as a unified foundation for diverse applications—from species classification to disease modeling—providing a comprehensive representation of geographical data that streamlines the deployment of specialized models.

Understanding our planet's complex systems requires more than just processing satellite imagery.

Modern Earth observation integrates diverse data streams: multi-resolution satellite imagery, high-precision drone captures, street-level photography, weather station measurements, and Lidar structural scans. The challenge lies in maintaining conceptual consistency—a tree appears differently in each modality yet represents the same object. Foundation Models bridge this gap by understanding cross-modal relationships, enabling comprehensive Digital Twins that integrate weather patterns, solar dynamics, and Earth's land-water systems.

GeoFMs in Action

There are now numerous GeoFMs available, each optimized for different data sources, resolutions, and modeling techniques. These models generate rich embeddings that enable three main types of applications:

- Semantic Understanding: Embeddings capture location context and patterns without raw data processing, enabling researchers to use geospatial information as a powerful auxiliary input.

- Similarity Search: Embeddings enable rapid, precise identification of regions with matching characteristics—from vegetation patterns to urban layouts—across massive datasets.

- Agile Modeling: Pre-trained embeddings accelerate the development of simple classifiers and complex pipelines for segmentation, object detection, and change analysis.

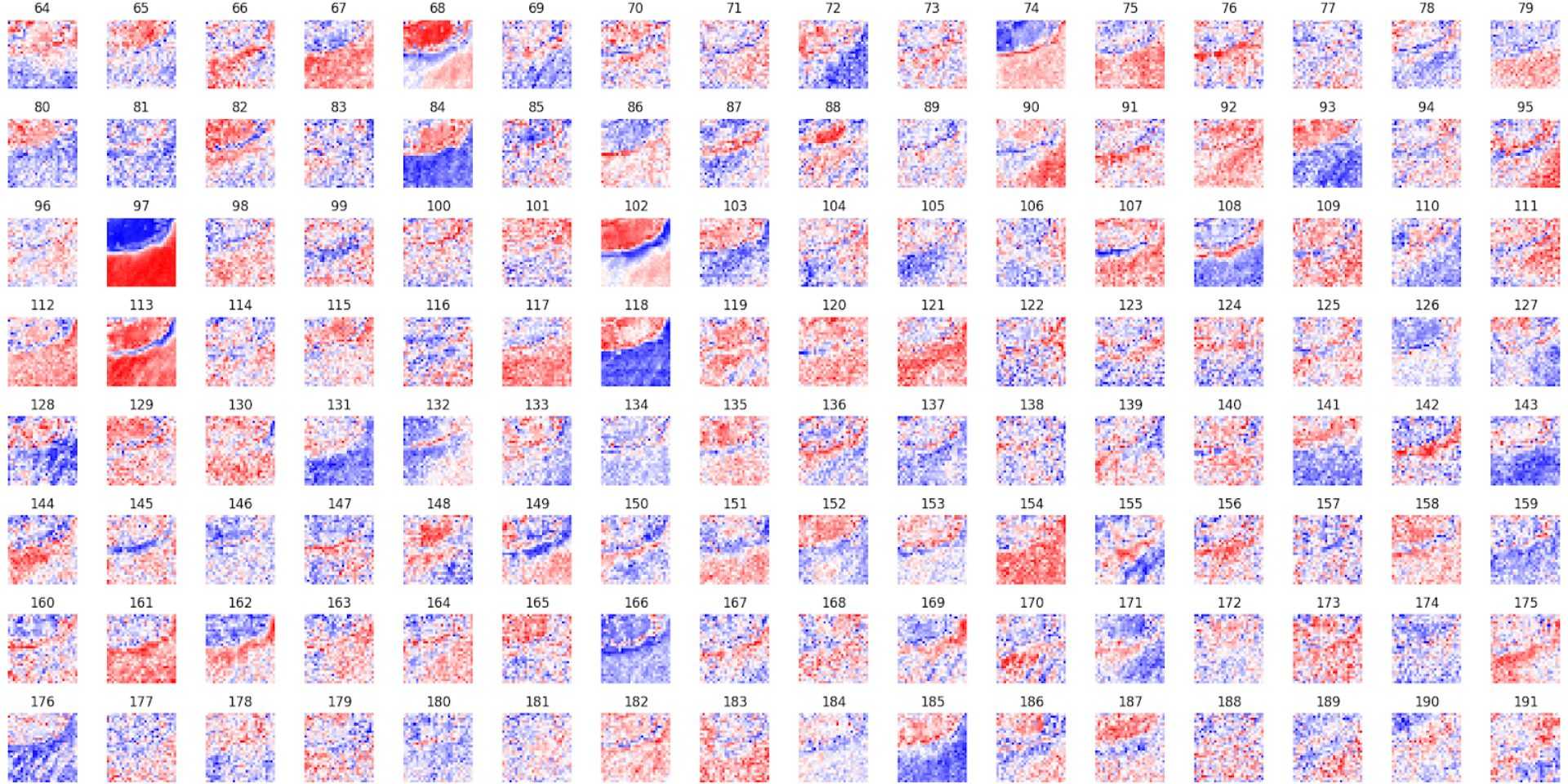

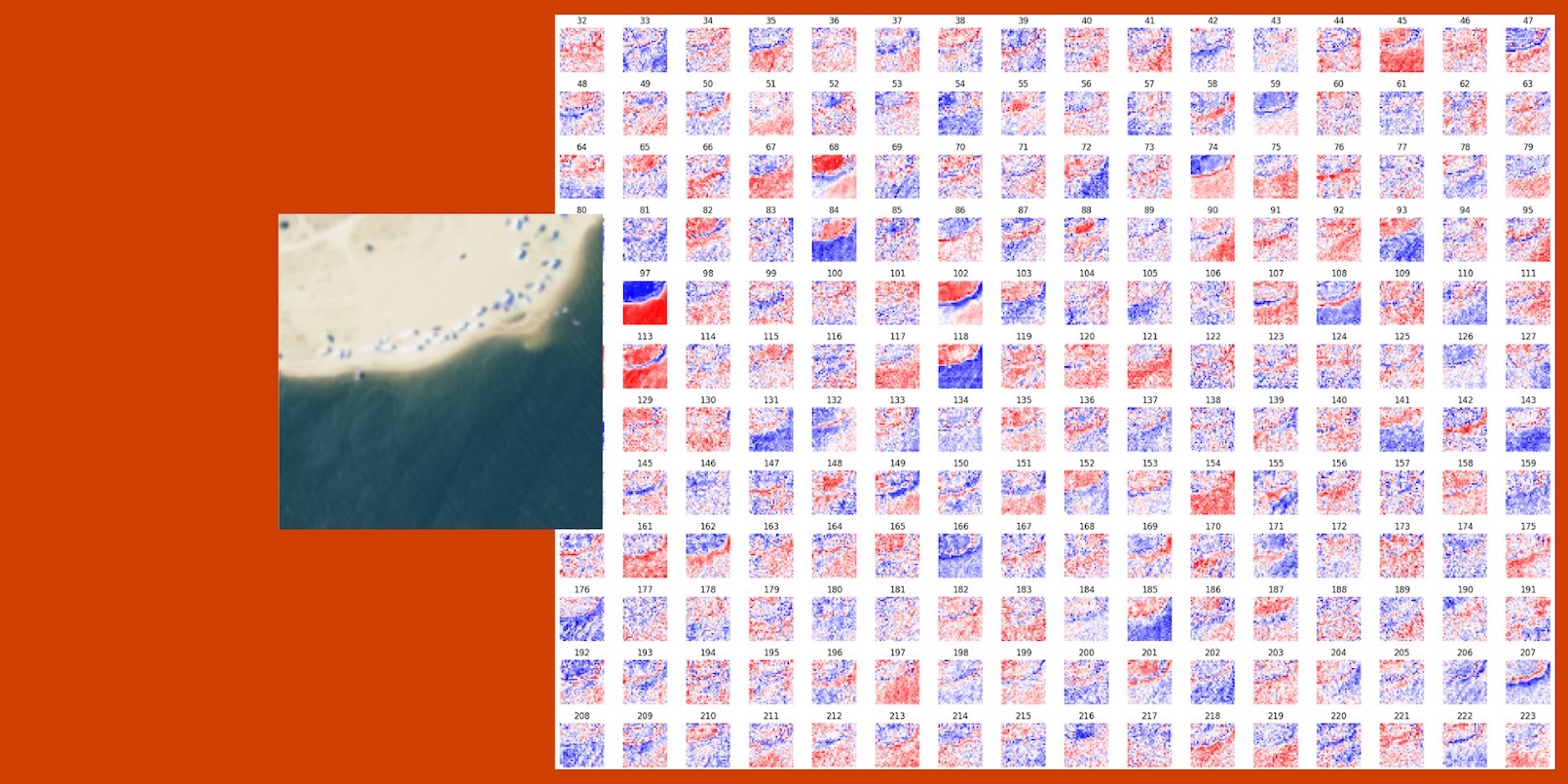

Visualizing the embedding space of Clay foundation model for a tile.

GeoFMs come with important technical constraints and opportunities. While GeoFMs pre-trained with Masked Autoencoders (MAE) excel at capturing global patterns, they face specific challenges with pixel-level tasks. Transformer architectures reduce feature resolution 4-5 times, sacrificing fine-grained spatial details needed for precise segmentation or change detection. Without additional upscaling techniques or custom decoders, mapping features back to exact pixel locations remains a key limitation.

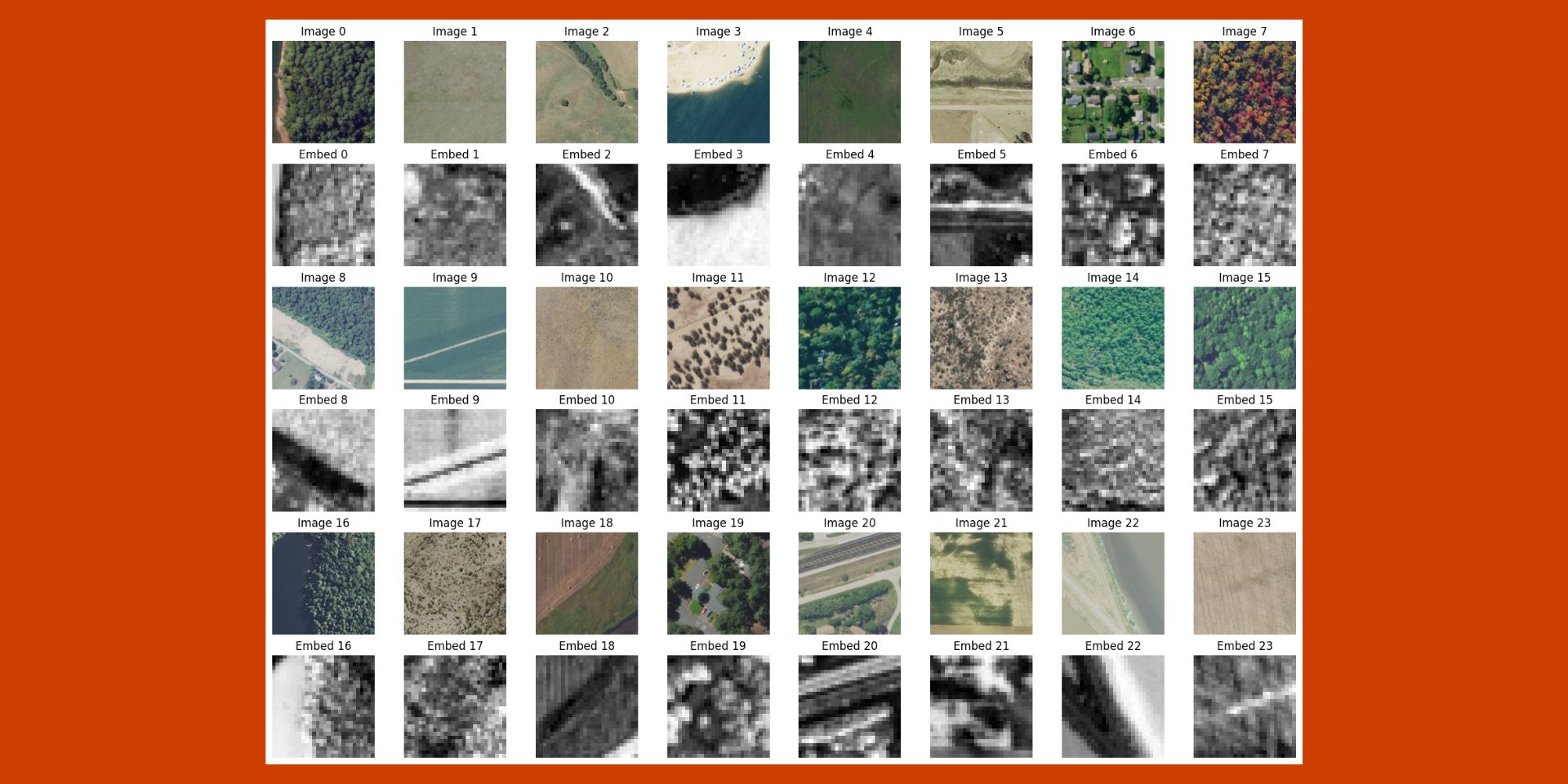

Successful implementation starts with visualizing embeddings. This critical step reveals how well the model captures relevant features, understands spatial relationships, and integrates multiple data sources—providing clear signals about model performance.

Visualizing different tiles and their embeddings. It is possible to see features like roads, forests, trees, ocean, etc. and quickly evaluate how well they are segmented at a lower resolution.

When embedding visualizations show a good amount of confidence, adapters offer the next step forward. These compact, trainable layers inject task-specific information without full model retraining, enabling rapid and resource-efficient fine-tuning for specialized tasks like segmentation and change detection.

Model Selection Guide

We developed a quick reference table below for anyone wanting to select a GeoFM for their ML task. Monitoring coastal erosion, detecting land use change, monitoring crops over time — there’s a model for that.

| Model | Datasets | Model Size (Params in millions) | Training Technique | Key Strengths | Geography | Data Timeline | License |

|---|---|---|---|---|---|---|---|

| Prithvi | Harmonized Landsat-Sentinel (HLS) | ~300 | MAE | Temporal segmentation, change detection | Primarily U.S. | 2015 - 2021 | Apache 2.0 |

| Presto | Sentinel-1, Sentinel-2, ERA5, DEM | ~25 | Pixel Time series | Time series analysis, computational efficiency | Global, focus on ecoregions | 2020 - 2021 | MIT |

| SatMAE | fMoW RGB, fMOW Sentinel-2 | ~300 | MAE + Spectral & Temporal Encodings | Multispectral representation | Global, with a focus on multispectral data | 2017 - 2021 | Attribution-NonCommercial 4.0 |

| ScaleMAE | Multi-resolution datasets | ~300 | Scale-aware MAE | Multiscale representation | Global, with variable spatial and temporal resolution | 2018 - 2022 | Attribution-NonCommercial 4.0 |

| DOFA | Sentinel-1, Sentinel-2, NAIP, EnMAP,MODIS | ~350 | MAE + Dynamic Weights | Multisensor Representation | Global, trained on multimodal data | 2016 - 2022 | MIT |

| Clay | Sentinel-1, Sentinel-2, Landsat 8 & 9, NAIP, Linz | ~500 | MAE + Dynamic Weights + MRL | Multisensor Representation | Global, trained on multimodal data | 2015 - 2023 | Apache 2.0 |

Building for Tomorrow

Foundation Models are fundamentally changing how we understand and respond to global challenges with unprecedented precision and scale.

The convergence of Large Language Models (LLMs) with GeoFMs marks the next leap, promising to make geospatial insights more accessible and actionable. This integration opens new possibilities for interpreting and applying Earth observation data at scale.

Our work on models like Clay and Prithvi continues to push these boundaries. Through collaboration with the broader geospatial ML community, we're establishing the standards and best practices that will shape the future of Earth observation.

The future of Earth observation is here, and it's powered by Foundation Models.

What we're doing.

Latest