By: Zhuangfang NaNa Yi, Howard Frederick, Ruben Lopez Mendoza, Ryan Avery, Lane Goodman

Rapid survey methods with AI in African savannahs can provide up-to-date information for conservation

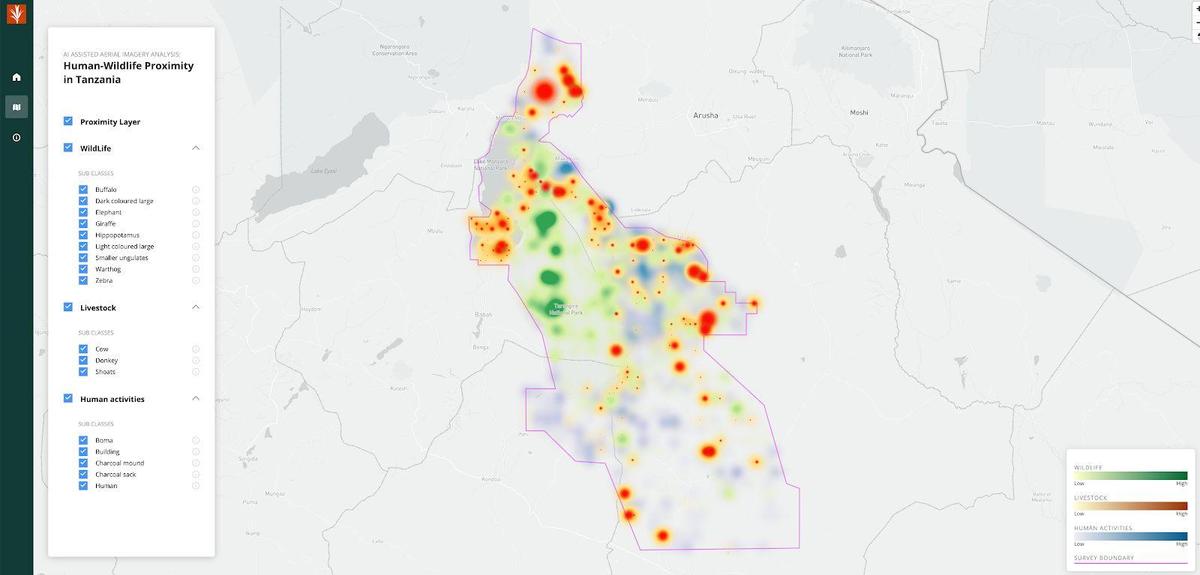

Mapping wildlife and human distributions is critical to mitigating human-wildlife conflict and to conserving wildlife, habitat, and human wellbeing. Aerial surveys are an essential tool, but new survey methods generate massive image archives. Scanning these images is time-consuming and difficult, which leads to significant delays in converting data into action. To address this, Development Seed and the Tanzania Wildlife Research Institute (TAWIRI) developed an AI-assisted methodology that significantly increases the speed of spotting and counting wildlife, human activities, and livestock after aerial surveys (explore the map and project visualization portal here). The method maps wildlife and human distributions in Tanzania and detects areas of potential conflict between wildlife and human-associated activity. This post provides an overview of our approach; more details are available in our technical report.

Why AI

Wildlife conservation is in a race against human expansion worldwide. The expansion of settlements and agricultural land, coupled with annual population growth of three percent in sub-Saharan Africa, makes it difficult to protect wildlife and its habitat. The proximity of humans and wildlife creates the potential for conflict through competition for resources and space. For people who live and work close to wildlife, wildlife activity can lead to loss of income, property, and sometimes human lives. At the same time, wildlife corridors are diminishing rapidly in many parts of Africa because of this competition.

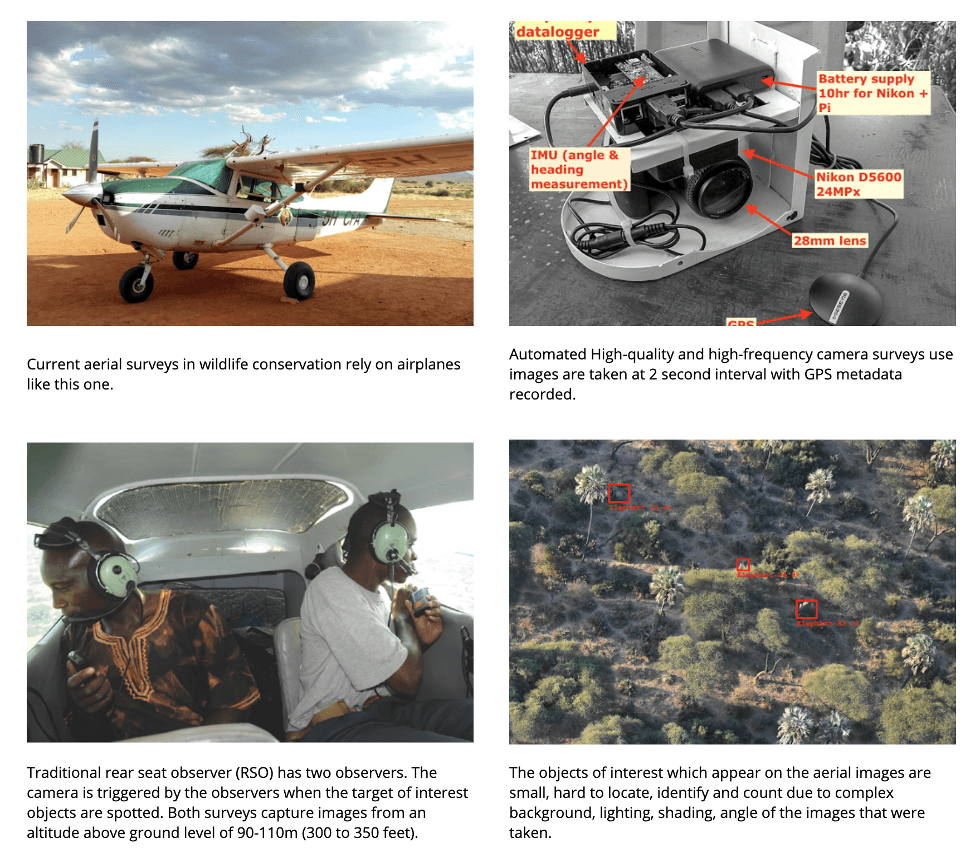

Survey systems that map human and wildlife distributions inform conservation practitioners, policy-makers, and local residents, and so contribute to wildlife conservation policies that can mitigate potential conflicts. Creating an early warning system requires frequent monitoring and tracking of human, wildlife, and livestock activities and movements. Currently, one way this is achieved is through aerial surveys. This project used two types of image capture from aerial censuses: traditional Rear Seat Observer (RSO) censuses, which rely on human-eye detection, and photographic aerial surveys (PAS). Traditional RSO surveys raise concerns because well-trained RSOs are hard to find and tend to become fatigued on long missions. RSO counts are also less precise and reliable than counts by human annotators who are trained to identify and count wildlife in captured images. RSO surveys typically take place at three- to five-year intervals because of high logistical costs and the difficulty of fielding logistics, flight crews, fieldwork, and analysis teams in remote locations. Newer methods such as PAS, which use automated camera systems, speed up detection and lower implementation costs, but they produce tens of thousands of images that require intensive lab work to sort through.

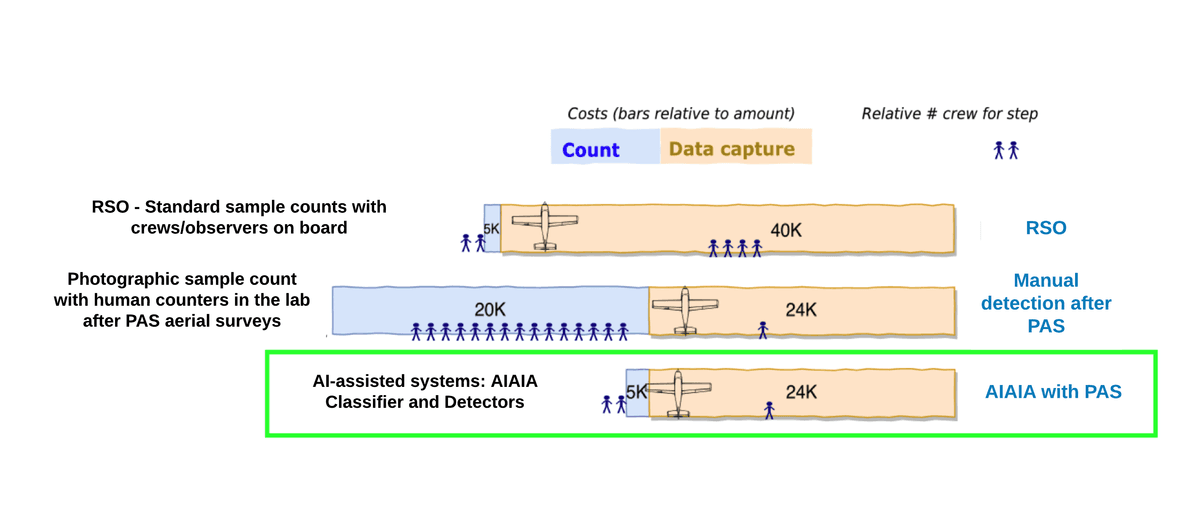

Our AI-assisted Aerial Imagery Analysis (AIAIA) introduces a workflow that 1) can speed up the processing of wildlife counts and the mapping of human influences in wildlife conservation areas by up to 60%, and 2) has the potential to reduce the implementation costs of counting by up to 20%, which will enable more frequent monitoring. Once the training data quality and model performance of the AI-assisted workflow mature and stabilize, we foresee that producing accurate human-wildlife proximity maps (explore the map) would take only 19% of the hours required by the current manual workflow, and that the cost of identifying and counting objects across 81,000 aerial images could fall from $20,000 to less than $5,000 (see the following graph).
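As a quick check on these projections, the arithmetic can be worked through using only the figures quoted above (81,000 images, $20,000 today, under $5,000 in the mature workflow, 19% of current hours). This sketch treats $5,000 as an upper bound, so the computed cost saving is a lower bound; the constant and function names are ours, not part of the AIAIA code.

```python
# Figures quoted in the text for ~81,000 aerial images.
N_IMAGES = 81_000
CURRENT_COST_USD = 20_000  # manual identification and counting today
TARGET_COST_USD = 5_000    # upper bound for a mature AI-assisted workflow
HOURS_FRACTION = 0.19      # projected share of current manual hours


def per_image_cost(total_usd: float, n_images: int) -> float:
    """Average cost of reviewing one image."""
    return total_usd / n_images


def percent_reduction(before: float, after: float) -> float:
    """Relative reduction from `before` to `after`, in percent."""
    return 100.0 * (before - after) / before


print(f"current: ${per_image_cost(CURRENT_COST_USD, N_IMAGES):.3f} per image")
print(f"target:  ${per_image_cost(TARGET_COST_USD, N_IMAGES):.3f} per image")
print(f"cost reduction: at least {percent_reduction(CURRENT_COST_USD, TARGET_COST_USD):.0f}%")
print(f"time reduction: {100 * (1 - HOURS_FRACTION):.0f}%")
```

With these inputs the average review cost drops from about $0.25 to about $0.06 per image, a cost reduction of at least 75%, and the projected time saving is 81%.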

We compare three approaches to detecting, identifying, and counting wildlife, livestock, and human activities after aerial surveys for an example survey zone: 1) An RSO count might cost $40,000 per survey, though counting the objects of interest costs as little as $5,000, because observers on the plane count objects during the flight and no lab annotation follows the survey. 2) Photographic aerial surveys are cheaper to fly and cost less for data capture, but they require an order-of-magnitude improvement in photographic review times. 3) AI-assisted systems, e.g. the AIAIA Classifier and Detectors, can speed up and reduce the cost of processing wildlife, human, and livestock counts and of mapping human influences in wildlife conservation areas after PAS surveys. These cost reductions and speed improvements make a photographic AIAIA workflow very attractive for long-term wildlife conservation.

Further, we’ve automated the process of publishing this information, putting it into the hands of researchers and policymakers more quickly.

|  |  |
|---|---|
| A. The AIAIA Classifier acts as a scanner: it filters the aerial images and keeps only the “windows” that contain objects of interest. The AIAIA Detectors then predict which classes of livestock, wildlife, or human activities are present in those “windows.” | B. The visualization portal shows the ML model outputs after human validation. The layers include human-wildlife proximity (HWP), wildlife, livestock, and human activities. HWP indicates spatial overlap among the layers, which could point to indirect conflicts developing over the long run. |

Technical Approach

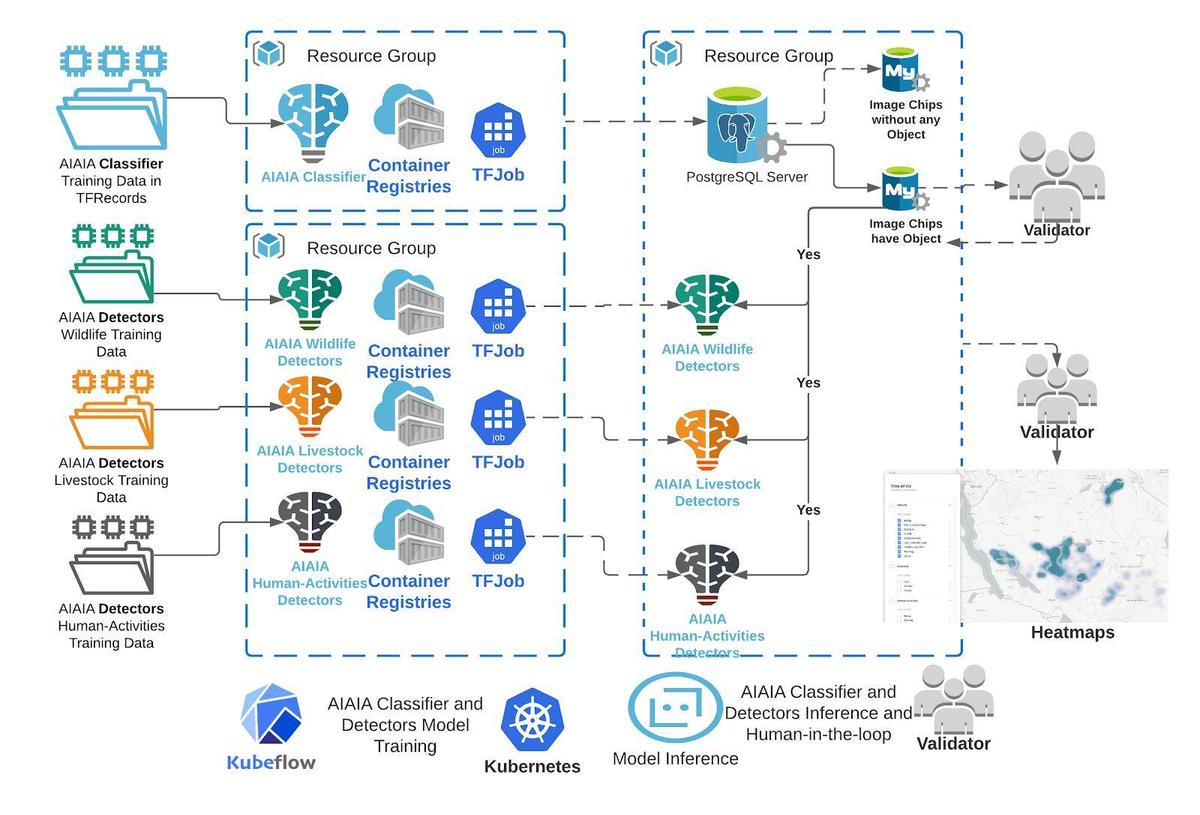

Automated camera systems, especially in PAS, generate massive numbers of images, many of which (as much as 98%) contain no wildlife or human settlements. Our approach uses a two-step process to review massive amounts of imagery quickly and to minimize processing costs. First, an AI-based image classifier, the AIAIA Classifier, filters tens of thousands of aerial images down to just those that contain an object of interest. We then pass the remaining images through a set of three object detection models, the AIAIA Detectors, which locate, classify, and count objects of interest within those images. The detected objects were assigned to image IDs, each with a unique geolocation recorded during the aerial survey. These geocoded detections were then used to generate maps of the distribution of wildlife, human activities, and livestock, with a visualization of mapped proximity highlighting potential conflict areas. Explore an example map from our visualization portal.
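The two-step process can be sketched in a few lines of Python. This is a minimal illustration, not the AIAIA implementation: the classifier and detectors are hypothetical callables standing in for the served models, `run_pipeline`, `Detection`, and the 0.5 threshold are assumptions, and image locations are passed in as a plain dictionary keyed by image ID.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List, Tuple

LatLon = Tuple[float, float]


@dataclass
class Detection:
    image_id: str
    label: str    # e.g. "buffalo", "cow", "building"
    score: float  # detector confidence in [0, 1]


# Hypothetical stand-ins for the served models:
#   classifier(image_id) -> probability that the image holds an object of interest
#   detector(image_id)   -> list of (label, score) pairs found in that image
Classifier = Callable[[str], float]
Detector = Callable[[str], List[Tuple[str, float]]]


def run_pipeline(
    image_ids: Iterable[str],
    classifier: Classifier,
    detectors: Dict[str, Detector],
    locations: Dict[str, LatLon],
    threshold: float = 0.5,  # assumed cut-off, not taken from the project
) -> List[Tuple[Detection, LatLon]]:
    # Stage 1: drop images the classifier considers empty.
    kept = [i for i in image_ids if classifier(i) >= threshold]
    # Stage 2: run every detector on the kept images and geocode each
    # detection with the location recorded for its image ID.
    results: List[Tuple[Detection, LatLon]] = []
    for image_id in kept:
        for detector in detectors.values():
            for label, score in detector(image_id):
                results.append((Detection(image_id, label, score), locations[image_id]))
    return results
```

Filtering first means the comparatively slow detectors only see the small fraction of images the classifier keeps, which is where most of the savings come from when up to 98% of images are empty.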

Specifically, the image classifier, the AIAIA Classifier, keeps images that contain any of our objects of interest: human activities, wildlife, or livestock. Each of the AIAIA Detectors separately locates either wildlife or livestock at the species level, or human activities. The model training sessions were deployed with Kubeflow on a Kubernetes service with GPU instances, and were managed with TFJob for hyperparameter tuning and search experiments. Training was monitored with TensorBoard so we could watch model performance on the validation dataset. The best-performing models, the classifier and the detectors (called “aiaia-fastrrcnn”), were selected and containerized as TFServing images hosted on Development Seed's Docker Hub for scalable model inference; see the TFServing images here. The AIAIA Classifier processed 12,000 image chips (400x400 pixels) per minute, and we were able to process 5.5 million images in under 8 hours. Each AIAIA Detector processed 172 images per minute on a K80 GPU machine.
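The classifier works on 400x400-pixel chips rather than whole frames. A minimal sketch of chipping and of the throughput arithmetic, assuming non-overlapping tiles, zero padding at the right and bottom edges, and images held as NumPy arrays of shape height x width x channels; the project's actual chipping scheme may differ, and `tile_image` and `hours_needed` are hypothetical helpers.

```python
from typing import List, Tuple

import numpy as np

CHIP = 400  # chip size in pixels, as stated in the text


def tile_image(image: np.ndarray, chip: int = CHIP) -> List[Tuple[Tuple[int, int], np.ndarray]]:
    """Split an H x W x C array into non-overlapping chip x chip tiles.

    The right and bottom edges are zero-padded so every tile is full size.
    Each tile is returned with the (row, col) pixel offset of its top-left corner.
    """
    h, w = image.shape[:2]
    pad_h = (-h) % chip
    pad_w = (-w) % chip
    padded = np.pad(image, ((0, pad_h), (0, pad_w), (0, 0)))  # constant zeros
    tiles = []
    for y in range(0, padded.shape[0], chip):
        for x in range(0, padded.shape[1], chip):
            tiles.append(((y, x), padded[y:y + chip, x:x + chip]))
    return tiles


def hours_needed(n_items: int, items_per_minute: float) -> float:
    """Wall-clock hours to process n_items at a constant rate."""
    return n_items / items_per_minute / 60.0
```

For example, `hours_needed(5_500_000, 12_000)` gives roughly 7.6 hours, consistent with the under-8-hour figure above. Keeping each tile's offset makes it possible to map detections back to the original frame.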

Two AIAIA workflows were developed in the study. The AIAIA Classifier, a binary image classifier, acts as a filter that keeps only image chips containing objects of interest. The AIAIA Detectors (wildlife, human-activities, and livestock detectors) detect and count these objects of interest in the images. Detected objects and counts were served to our minimum viable product (MVP), which flags potential human-wildlife conflicts on the ground for wildlife conservation communities and policy-makers.

Training Data and Quality Improvement

We used images captured during RSO censuses as training data for machine learning model development, and ran model inference on images captured by PAS. The Tanzania Wildlife Research Institute provided annotators (wildlife domain experts) at a small annotation lab in Arusha, northern Tanzania, to process a database of images from RSO survey counts collected by TAWIRI in Tanzania over the past decade. Labels were annotated by a group of volunteers. Around 7,000 RSO images were labeled and passed to the Development Seed machine learning engineers for model development. The ultimate goal of labeling is to develop a model that can identify features (run inference) on the PAS dataset, which has higher spatial resolution and is georeferenced.

While AI-assisted imagery analysis is promising, the workflow is still not perfect. The quality of its outputs depends heavily on the quality of the training dataset we supply to the models. In our case, the annotation task was new to all the volunteer annotators, and our aerial images were challenging to annotate for a variety of reasons: the objects of interest are small and hard to identify because of the lighting, the angle of the shots, and the body size and colors of the objects. We observed the following quality issues in the training data:

- Missing labels. Some wildlife, livestock, or human activities were not annotated, particularly when an image was blurry, showed a complex landscape, or contained many objects.

- Label duplication. Some objects were annotated twice or more, which worsened class imbalance and made validation and test metrics less accurate: a model should detect each object only once, so every duplicate label is scored as a missed detection (a false negative).

- Mislabeled. Many instances, particularly in the wildlife and livestock categories, were labeled as the wrong class. We also count as mislabeled the cases where multiple kinds of objects were mixed under one class.
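Duplicate labels, in particular, can be screened for automatically before human review. A minimal sketch, assuming boxes are stored as `(xmin, ymin, xmax, ymax)` pixel coordinates: it flags pairs of labels in the same image whose intersection-over-union (IoU) meets a threshold. The 0.7 threshold and the helper names are assumptions, and flagged pairs still need a human decision, since heavily overlapping animals can be genuine.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def find_duplicates(labels: List[Tuple[str, Box]], thresh: float = 0.7) -> List[Tuple[int, int]]:
    """Index pairs of labels in one image whose boxes overlap heavily.

    Pairs with different classes are returned too: a large overlap between
    two classes is more likely a mislabel than two distinct objects.
    """
    pairs = []
    for i in range(len(labels)):
        for j in range(i + 1, len(labels)):
            if iou(labels[i][1], labels[j][1]) >= thresh:
                pairs.append((i, j))
    return pairs
```

The pairwise loop is quadratic in the number of labels per image, which is fine at the scale of a single aerial frame.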

Each of these problem types varied in degree depending on the difficulty of the class being annotated. Overall, these issues were consistently present in the training, validation, and test datasets. This made the AIAIA Detectors harder to train accurately and made the evaluation metrics less robust, since many ground truth labels were incorrect. We discuss these issues, and how quality can be improved, in detail in the section “Results and Discussion” of the main report.

Results

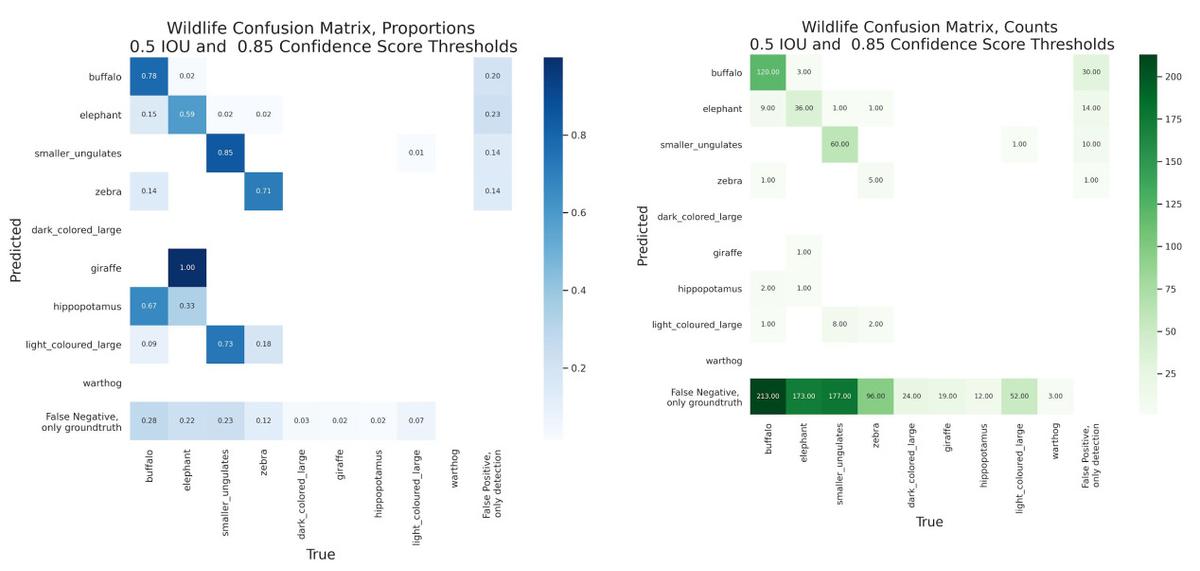

Even with training data quality issues, our classifier model, the AIAIA Classifier, achieved an F1 score above 0.84 on the test dataset. The best-performing classes for the wildlife, livestock, and human activities detectors (the AIAIA Detectors) were buffalo (.48 F1), cow (.29 F1), and building (.49 F1), with each class having higher precision than recall. Other categories performed well on precision (few false positives) while recall (the ratio of true positives to all ground truth objects) suffered, including elephant (.59 precision, .17 recall), smaller ungulate (.85 precision, .24 recall), and shoats (.69 precision, .14 recall). The high precision shows that our model is capable of correct, high-confidence predictions, while the low recall shows that it has trouble separating all ground truth objects from the background. Low recall scores generally point to poor training dataset quality. We expect that addressing training data quality issues, and either discarding classes with few samples or increasing the number of samples, will substantially improve both recall and precision for our object detection models.
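Precision is TP / (TP + FP) and recall is TP / (TP + FN); F1 is their harmonic mean, 2PR / (P + R), which is pulled toward the smaller of the two. The short calculation below applies this to the precision and recall values reported above; it only recombines the published numbers, and the `f1` helper is ours.

```python
def f1(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0 when both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall) pairs reported in the text.
reported = {
    "elephant": (0.59, 0.17),
    "smaller ungulate": (0.85, 0.24),
    "shoats": (0.69, 0.14),
}

for name, (p, r) in reported.items():
    print(f"{name}: F1 = {f1(p, r):.2f}")
```

It prints F1 values of 0.26 for elephant, 0.37 for smaller ungulate, and 0.23 for shoats, showing how low recall caps F1 even when precision is high.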

Proportion Confusion Matrix for the AIAIA Wildlife Detector, with values normalized by row totals, and the Count Confusion Matrix, where a “1” indicates that 1 object was predicted in the row class and was annotated with a column class.
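The proportion matrix is derived from the count matrix by dividing each row by its total. A minimal NumPy sketch, following the caption's convention that rows are predicted classes and columns are annotated classes; `row_normalize` is a hypothetical helper, and rows with no predictions are left as zeros rather than producing NaNs.

```python
import numpy as np


def row_normalize(counts: np.ndarray) -> np.ndarray:
    """Divide each row of a count confusion matrix by its row total.

    Rows that sum to zero (a class that was never predicted) stay all-zero.
    """
    counts = np.asarray(counts, dtype=float)
    totals = counts.sum(axis=1, keepdims=True)
    return np.divide(counts, totals, out=np.zeros_like(counts), where=totals > 0)
```

Under this convention, each diagonal entry of the proportion matrix is the share of a class's predictions that match the annotated class.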

Toward AI-assisted wildlife detection

The results from the AIAIA Detectors were re-imported into the Computer Vision Annotation Tool (CVAT) for human-in-the-loop validation before the detected wildlife, livestock, and human activities were geocoded to generate the human-wildlife proximity map. The CVAT server is currently hosted on Azure. CVAT is a free, interactive image annotation tool for computer vision tasks, which we also used to create the original training datasets for our AI model development. For validating model results, tasks are split into multiple jobs so that multiple users can log in and work on CVAT in parallel at the same time.
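Splitting a validation task into jobs amounts to partitioning its images into consecutive batches, one per annotator session. CVAT does this itself when a task is created; the sketch below only illustrates the idea in plain Python, does not use CVAT's API, and `split_into_jobs` and the job size are hypothetical.

```python
from typing import List, Sequence


def split_into_jobs(frames: Sequence[str], job_size: int) -> List[List[str]]:
    """Partition a task's frames into consecutive, non-overlapping jobs.

    The last job holds whatever remains and may be smaller than job_size.
    """
    if job_size <= 0:
        raise ValueError("job_size must be positive")
    return [list(frames[i:i + job_size]) for i in range(0, len(frames), job_size)]
```

Because the jobs do not overlap, each image is validated by exactly one annotator, and the jobs can be reviewed simultaneously.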

Human annotators validate AIAIA Detectors model outputs with CVAT hosted on Azure

The future AI-assisted workflow would benefit greatly from a human-in-the-loop approach that improves training data quality by helping annotators annotate only images with objects in them, annotate difficult classes, and fix incorrect ground truth. We propose bringing humans into the loop in three phases: 1) visual inspection and validation of the training dataset before AIAIA Classifier training; 2) inspection of AIAIA Classifier outputs before the filtered images are passed to the AIAIA object detectors; 3) manual inspection, validation, evaluation, and correction of detector outputs before the detected, identified, and counted objects are aggregated for the minimum viable product (MVP) visualization that produces proximity and risk maps (see the AIAIA workflow diagram above). Improving training data quality is critical for our AI-assisted workflow.
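The ordering of the three proposed review phases can be expressed as a small function. This is an illustrative sketch, not a project API: `review`, `train`, `classify`, `detect`, and `aggregate` are hypothetical callables, and in practice each `review` step would be a CVAT session rather than a function call.

```python
from typing import Any, Callable, List


def ai_assisted_workflow(
    labels: List[Any],
    images: List[Any],
    *,
    review: Callable[[str, List[Any]], List[Any]],
    train: Callable[[List[Any]], Any],
    classify: Callable[[Any, List[Any]], List[Any]],
    detect: Callable[[List[Any]], List[Any]],
    aggregate: Callable[[List[Any]], Any],
) -> Any:
    # Phase 1: humans inspect and correct training labels before classifier training.
    labels = review("training labels", labels)
    model = train(labels)
    # Phase 2: humans check the classifier's filtered images before detection.
    kept = review("classifier output", classify(model, images))
    # Phase 3: humans validate and correct detections before aggregation for the MVP maps.
    detections = review("detections", detect(kept))
    return aggregate(detections)
```

Passing each checkpoint the output of the previous stage makes explicit that errors caught early (for example, bad training labels) never reach the later stages.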

Human-wildlife proximity (in red) in Tanzania is computed where wildlife overlaps with livestock and/or human activities. In our current survey area, this proximity is mainly caused by livestock, especially outside Tarangire National Park, Tanzania. Explore the map.
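One simple way to compute such proximity is to bin geocoded detections into a grid and flag cells that contain both wildlife and livestock or human activity. The sketch below does that; the grid approach, the 0.05-degree cell size, and the grouping of class names into the two sets are assumptions for illustration, not the method used for the published map.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple

Cell = Tuple[int, int]

# Assumed grouping of detector classes; adjust to the real label set.
WILDLIFE = {"buffalo", "elephant", "smaller ungulate"}
HUMAN_OR_LIVESTOCK = {"cow", "shoats", "building"}


def grid_cell(lat: float, lon: float, cell_deg: float) -> Cell:
    """Index of the cell_deg x cell_deg grid cell containing (lat, lon)."""
    return (math.floor(lat / cell_deg), math.floor(lon / cell_deg))


def proximity_cells(
    detections: Iterable[Tuple[float, float, str]],  # (lat, lon, label)
    cell_deg: float = 0.05,  # assumed cell size in degrees
) -> Set[Cell]:
    kinds: Dict[Cell, Set[str]] = defaultdict(set)
    for lat, lon, label in detections:
        cell = grid_cell(lat, lon, cell_deg)
        if label in WILDLIFE:
            kinds[cell].add("wildlife")
        elif label in HUMAN_OR_LIVESTOCK:
            kinds[cell].add("human_or_livestock")
    # A cell is flagged only when both groups were detected in it.
    return {cell for cell, seen in kinds.items() if len(seen) == 2}
```

A fixed grid keeps the computation simple and easy to map; a distance-based buffer around each detection would be a natural alternative if cell edges split nearby groups.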

This project was funded by Microsoft AI for Earth and Global Wildlife Conservation.


Special thanks to Habari Node Limited in Arusha for their invaluable help in getting annotation data into the cloud.

Read more about our methodology in the technical report.

Project Public GitHub Repository: https://github.com/TZCRC/AIAIA

CONTACTS

Development Seed

General Information: info@developmentseed.org

Machine Learning Lead: nana@developmentseed.org

TAWIRI

Director General: barua@tawiri.or.tz

Conservation Information Monitoring Unit: cimu@tawiri.or.tz

Tanzania Conservation Resource Centre

TZCRC Director: director@tzcrc.org
