Finding vulnerable housing in street view images using AI to create safer cities

This is an ongoing project to support the World Bank’s Global Program on Resilient Housing.

Hard to find, easy to fix

People living in neighborhoods with poor building standards are more likely to be killed by a disaster. Their homes, too often built cheaply or from makeshift materials, are vulnerable to earthquakes, hurricanes, and landslides. These (typically poor) inhabitants make up a disproportionate share of the 1,300,000 lives taken by disasters in the last 25 years. Families move into poor urban communities seeking better jobs and opportunities but lack the money or technical knowledge to access safe, resilient housing. Governments and communities strive to retrofit these structures for safety, which is usually a simple, cheap, and effective process, but dangerous housing remains a persistent problem. The issue isn’t fixing these buildings; it’s finding them.

Identifying vulnerable housing is tedious. Currently, teams of trained experts must walk neighborhoods with clipboards to assess building resilience (e.g., construction materials, integrity of the design, approximate age, and completeness of construction). This manual approach can take a month or longer and is prone to human error, draining resources that could otherwise go to retrofitting. The challenge is bringing the expertise of a structural engineer to every corner of a city in a way that’s accurate, fast, and repeatable.

Development Seed is helping the World Bank identify dangerous housing through the use of artificial intelligence. In this approach, the World Bank collects images in at-risk urban neighborhoods using vehicles outfitted with a multi-camera platform (similar to Google’s street view cars). A machine learning (ML) pipeline then ingests these street view images and autonomously identifies risky structures. Finally, this information is organized and displayed in an online map that helps decision makers direct retrofitting efforts. Instead of teams of engineers, this approach only requires a driver to weave through a neighborhood with a car-mounted camera. We aim to empower governments and local communities with this technology (especially those affected by climate change); they understand that increasing resilience is important but lack knowledge of where or how to act. Insights from this project will help communities find and protect infrastructure before a disaster rather than rebuild it after.

Automating for speed

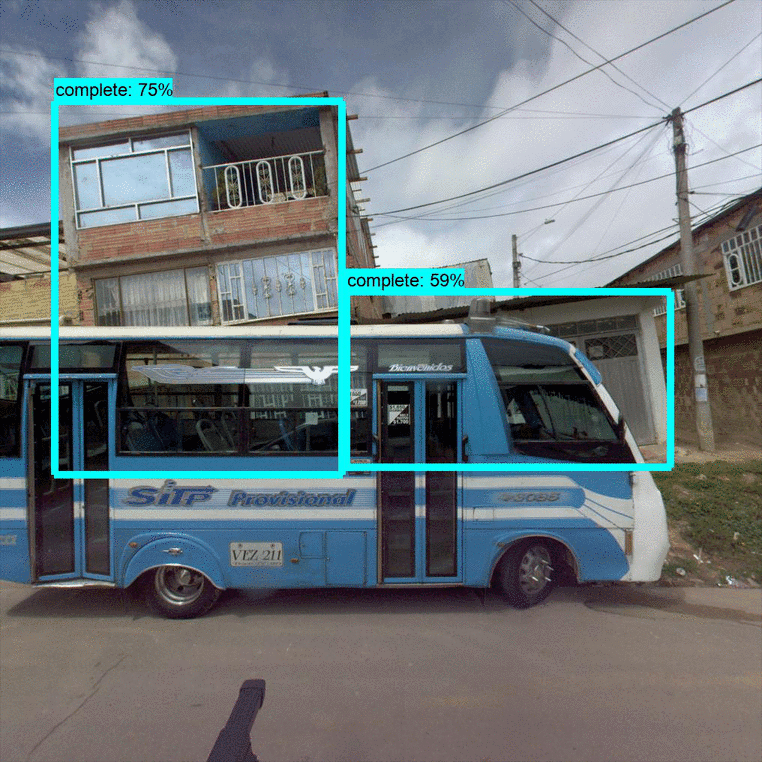

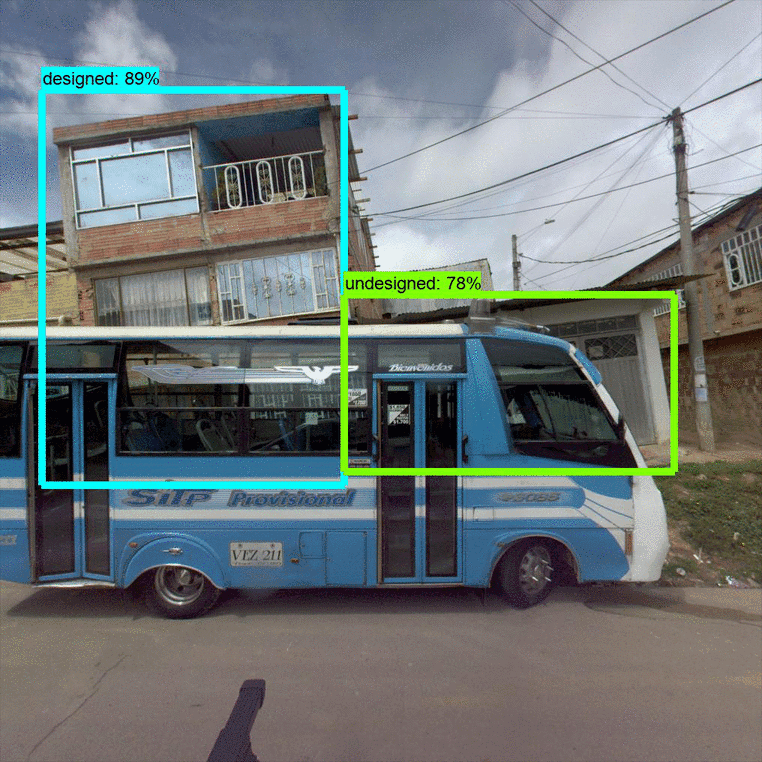

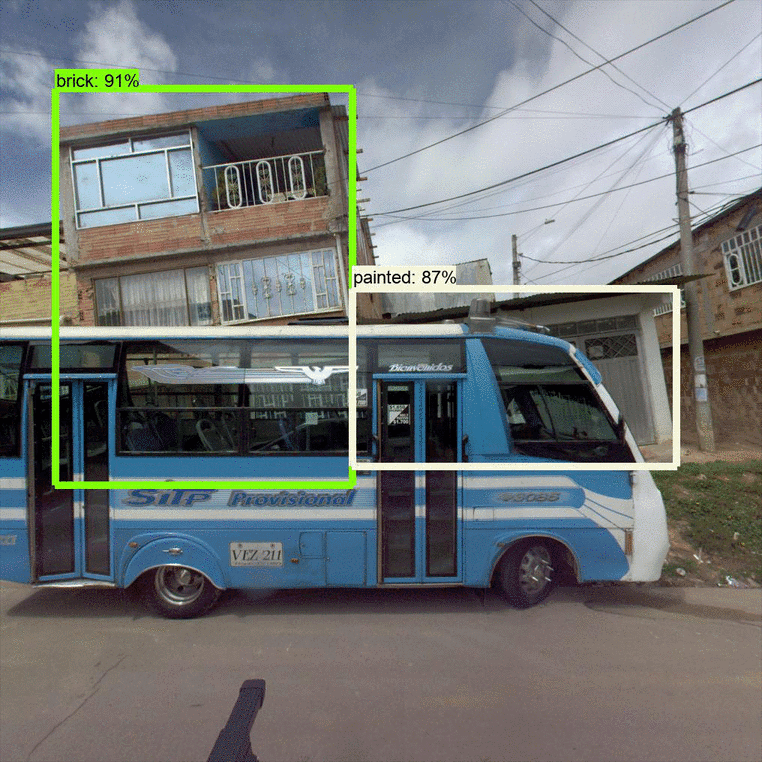

Retrofitting vulnerable buildings starts with finding them. Our automated “Housing Passport” tool creates a profile for each home using street view images analyzed by ML models. We trained these ML models (using TensorFlow) to detect three building characteristics: whether construction is complete, the building material (e.g., concrete, brick, or painted), and whether the building was designed by an architect. Our Data Team manually outlined these building properties in thousands of images. After seeing example after example, the models learned to recognize these properties and draw their own bounding boxes (Figure 1). Speed and cost are key here; once trained, this ML system can process over 300,000 images in one hour for about 1.5 USD.
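To put the quoted throughput in perspective, the short sketch below works through the arithmetic. It uses only the two figures stated above (which the text gives as approximate), and the function name `hours_and_cost` is just an illustrative helper, not part of the project's tooling.

```python
# Figures quoted in the text; both are approximate ("over" and "about").
IMAGES_PER_HOUR = 300_000
COST_PER_HOUR_USD = 1.5

images_per_second = IMAGES_PER_HOUR / 3600
cost_per_image_usd = COST_PER_HOUR_USD / IMAGES_PER_HOUR
cost_per_million_usd = cost_per_image_usd * 1_000_000


def hours_and_cost(n_images):
    """Estimate processing time (hours) and cost (USD) for n_images."""
    hours = n_images / IMAGES_PER_HOUR
    return hours, hours * COST_PER_HOUR_USD


print(f"{images_per_second:.1f} images per second")
print(f"{cost_per_million_usd:.2f} USD per million images")
print(hours_and_cost(1_200_000))
```

At these rates, inference costs roughly 5 USD per million images, so the driving and image collection are likely to dominate the budget rather than the ML step.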

Figure 1. Detecting building properties in street view imagery. Sample detections for building completeness (left), design (middle), and construction material (right). Notice that the models work reasonably well even with obstructions (like this bus).

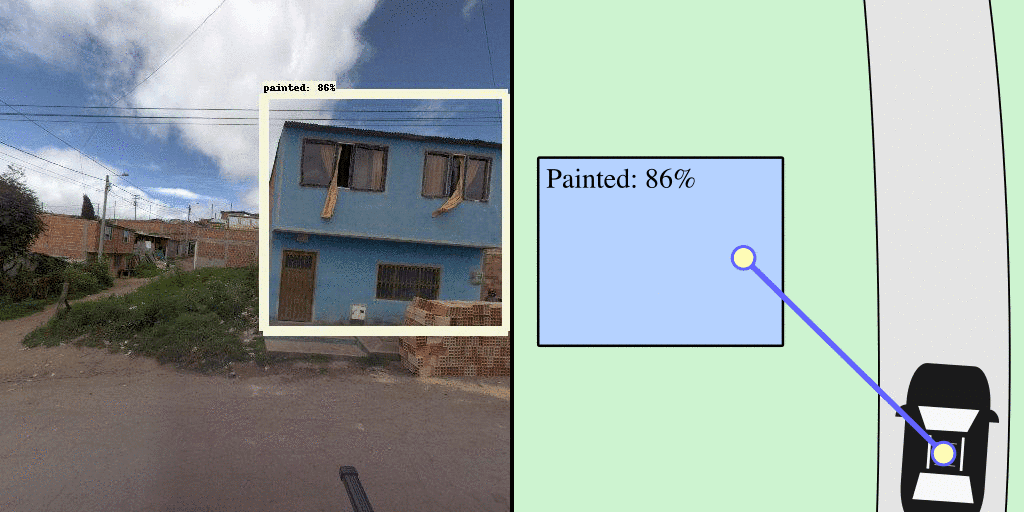

Next, we register these building detections in a building footprint map by combining a few pieces of information. The car’s camera platform captures its GPS location and direction of travel every time it snaps a picture. Since the camera has a known field of view and points in a fixed direction relative to the car, we know the range of compass directions each image covers. We find the center of each bounding box to determine the compass direction of every detected structure; for example, a painted building at 270 degrees. This allows us to draw a ray out from the car’s location in the direction of the detected building (Figure 2, right). Finally, we link the street view images to our top-down map: we search the building footprint map for the building polygon that intersects each detection ray and assign it the detected property (e.g., “material: painted”).
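The bearing calculation described above can be sketched in a few lines. This is a minimal illustration, not the project's actual code: it assumes a simple pinhole camera (the real multi-camera platform may use a different lens model), works in a local planar frame measured in meters, and all function and parameter names are hypothetical.

```python
import math


def detection_bearing(heading_deg, camera_offset_deg, fov_deg,
                      image_width_px, box_xmin, box_xmax):
    """Compass bearing (degrees clockwise from north) of a box's center.

    heading_deg: car's direction of travel.
    camera_offset_deg: fixed camera direction relative to the car
        (e.g. -90 for a camera pointing left). Assumed known.
    fov_deg: horizontal field of view of the camera.
    """
    box_center_px = (box_xmin + box_xmax) / 2.0
    # Pinhole assumption: focal length in pixels from the field of view.
    focal_px = (image_width_px / 2.0) / math.tan(math.radians(fov_deg) / 2.0)
    # Angle of the box center from the optical axis (positive = to the right).
    offset_deg = math.degrees(
        math.atan((box_center_px - image_width_px / 2.0) / focal_px)
    )
    return (heading_deg + camera_offset_deg + offset_deg) % 360.0


def ray_endpoint(east_m, north_m, bearing_deg, length_m=50.0):
    """End point of a detection ray in a local (east, north) frame."""
    theta = math.radians(bearing_deg)
    return (east_m + length_m * math.sin(theta),
            north_m + length_m * math.cos(theta))


# Car driving north, camera pointing left, box centered in the image.
bearing = detection_bearing(0.0, -90.0, 90.0, 1000, 400, 600)
print(bearing)  # 270.0
print(ray_endpoint(0.0, 0.0, bearing))
```

The 50 m ray length is an arbitrary placeholder; in practice it would be chosen to reach the buildings facing the street without extending into the next block.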

Figure 2. Registering street view detections to the map. The continuous stream of images often leads to several detections per structure (left). Knowing the car’s location, heading, and camera field of view, we create a geospatial line for each bounding box detection originating at the car and pointing outward (right). We assign this detected property (and confidence) to the first building polygon (blue box) intersected.

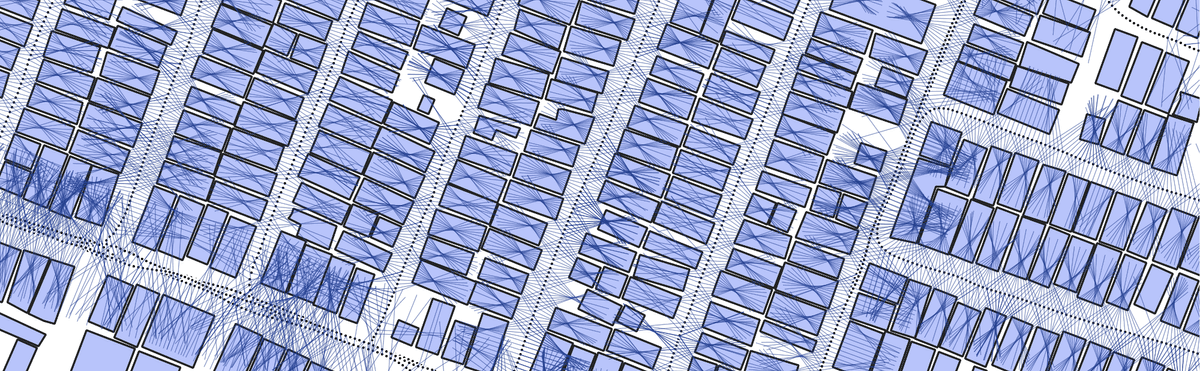

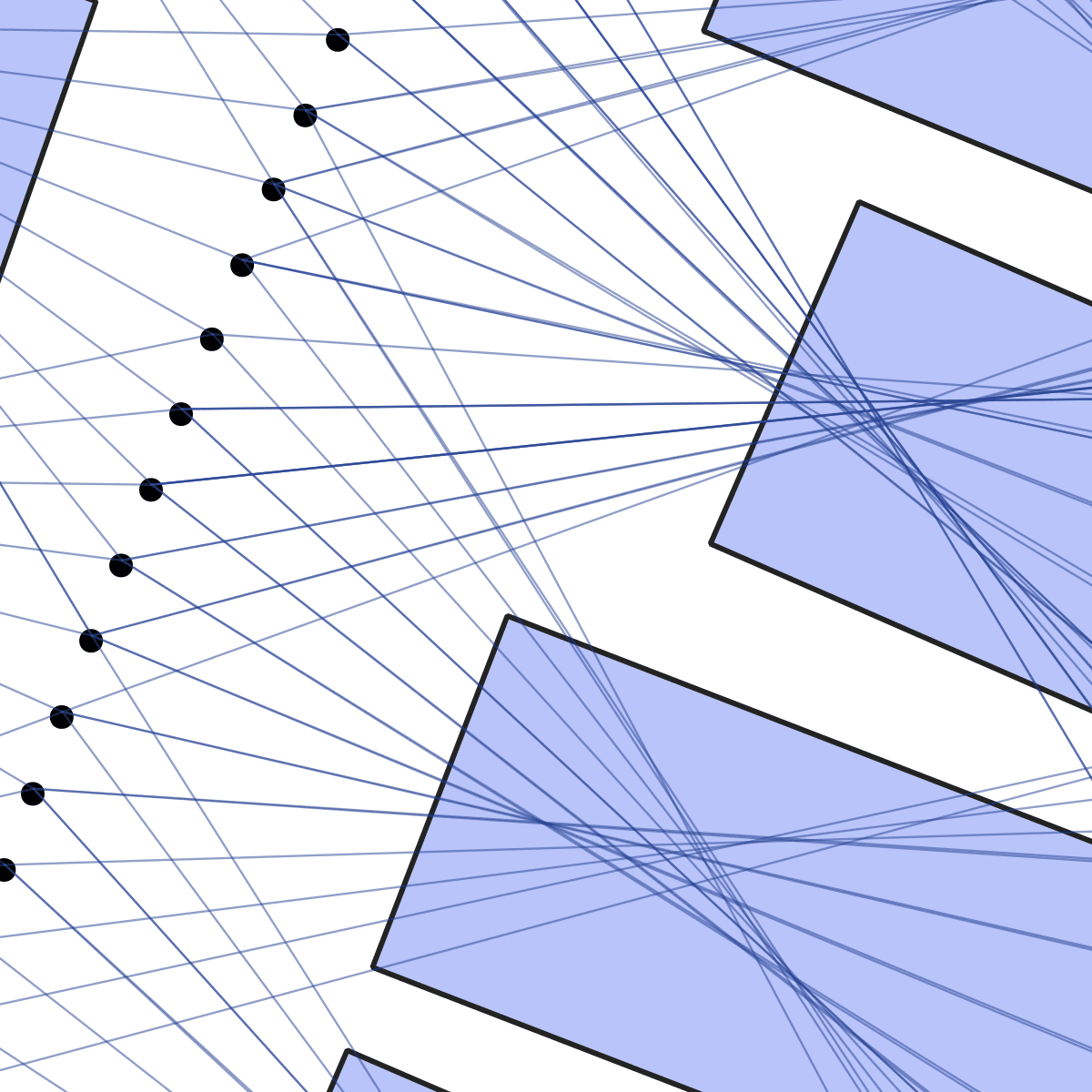

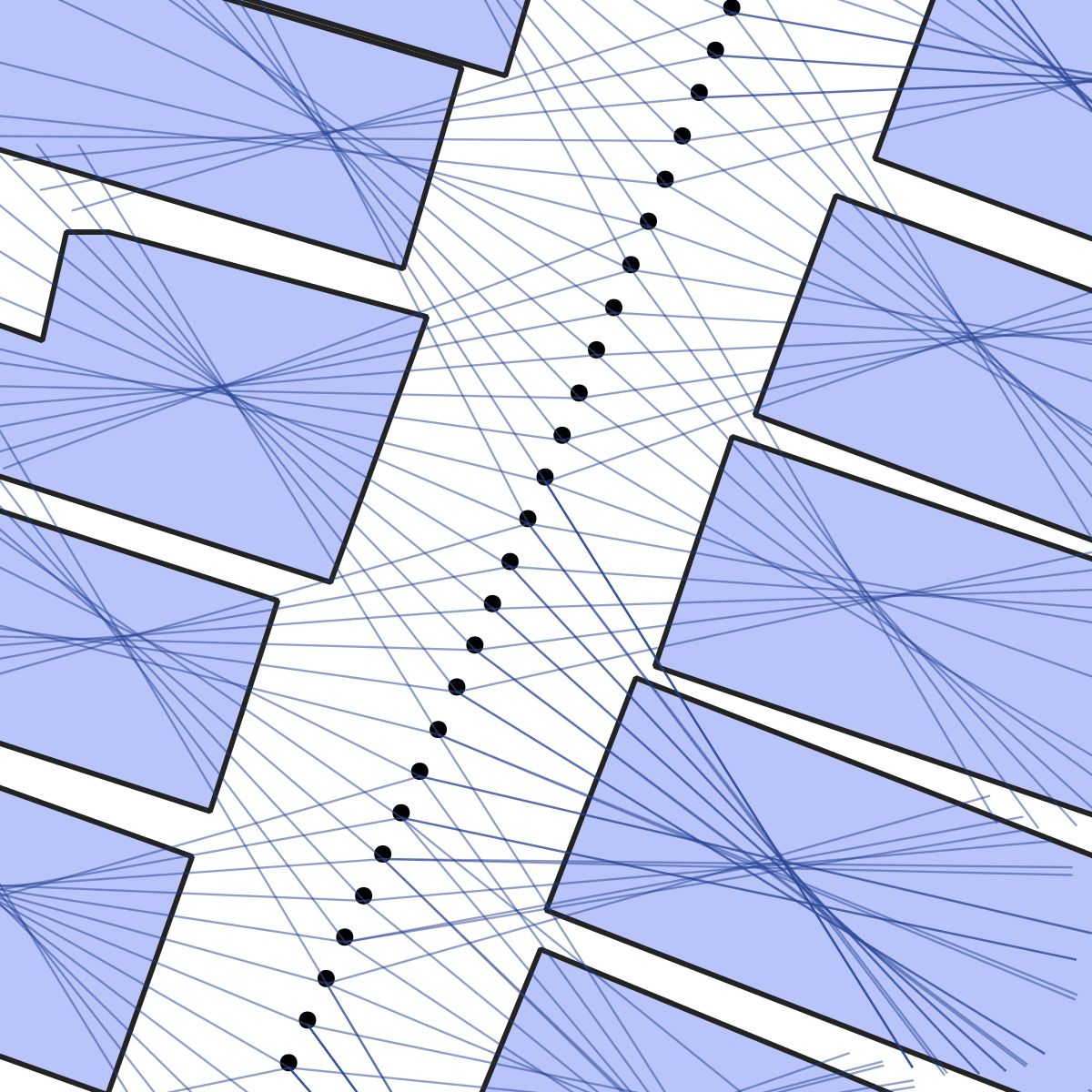

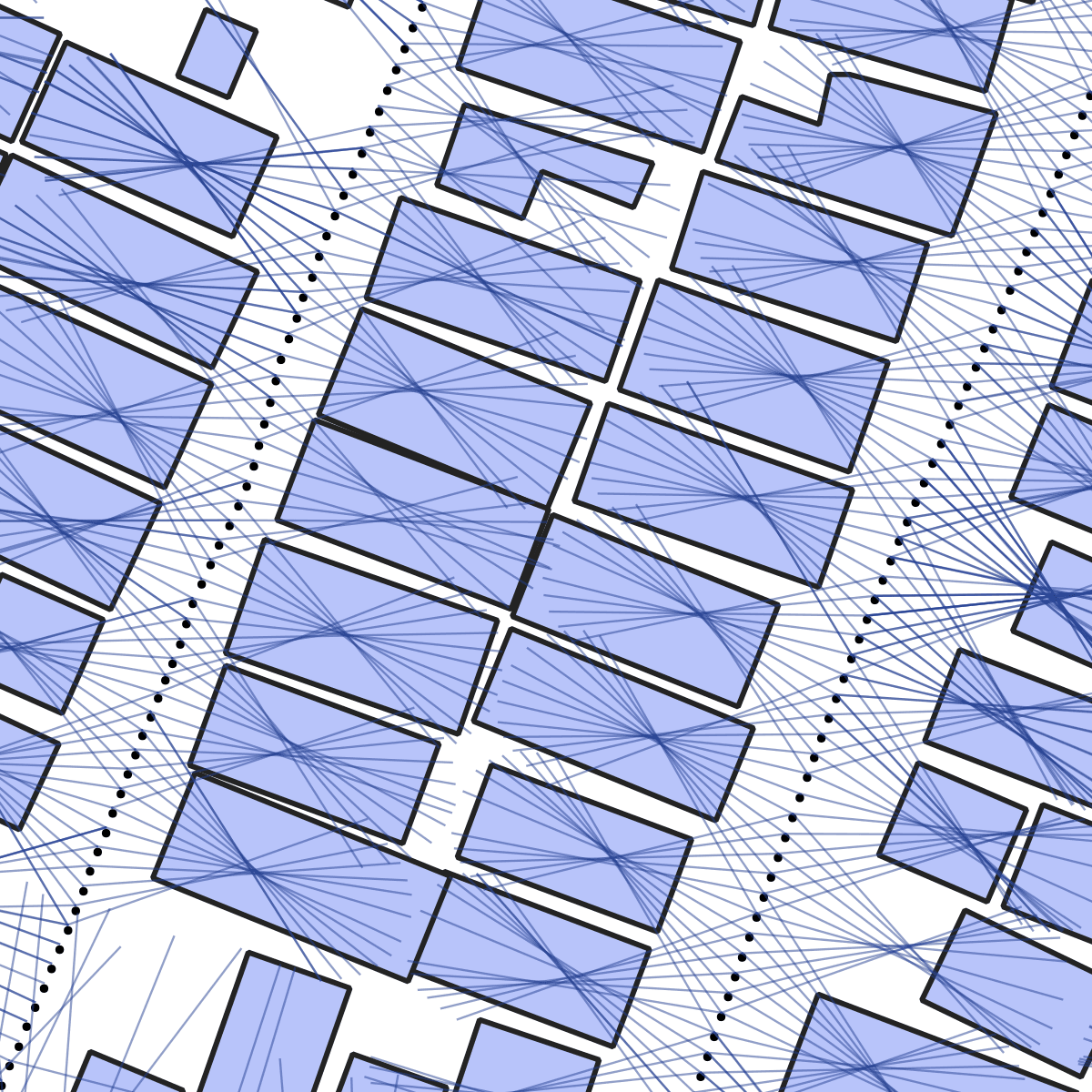

We apply the same logic for entire neighborhoods. The camera records multiple images per meter traveled, which leads to 5–10 detection rays for each building. We combine this information with the building footprint map traced from drone imagery. Mapping the buildings and detection rays together, the scene resembles a magnifying glass focusing rays of light on each building polygon (Figure 3). For every building, we distill these ML detections into one determination per building attribute, similar to an engineer making a single determination after inspecting a building from different angles. Repeating this for each of the three properties, we generate a neighborhood-wide building database that supports our visualization tool and populates the building profiles.
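The text does not say how the 5–10 detections per building are distilled into one determination. One plausible approach, shown here purely as an assumption, is a confidence-weighted vote: sum the confidences for each label and keep the label with the highest total. The function name and the input layout are hypothetical.

```python
from collections import defaultdict


def aggregate_detections(detections):
    """Combine per-ray detections into one label per building.

    detections: iterable of (building_id, label, confidence) tuples
        for a single attribute (e.g. material).
    Returns a dict of building_id -> (winning label, share of total
    confidence that went to that label).
    """
    scores = defaultdict(lambda: defaultdict(float))
    for building_id, label, confidence in detections:
        scores[building_id][label] += confidence

    result = {}
    for building_id, by_label in scores.items():
        label, score = max(by_label.items(), key=lambda kv: kv[1])
        result[building_id] = (label, score / sum(by_label.values()))
    return result


detections = [
    ("b1", "brick", 0.9),
    ("b1", "brick", 0.8),
    ("b1", "concrete", 0.6),
    ("b2", "painted", 0.7),
]
print(aggregate_detections(detections))
```

The returned share gives a rough sense of agreement between views: a value near 1.0 means nearly all rays agreed, while a value near 0.5 flags a building that may deserve a manual look. Running the function once per attribute would yield the three determinations per building described above.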

Figure 3. Mapping street view feature detections (dark lines) from image capture locations (black dots) while the car was driving past buildings (blue polygons). We assign detected features to buildings by finding the line/polygon intersection closest to the car position. The overhead building footprint map is derived from drone imagery.

Interacting with the data

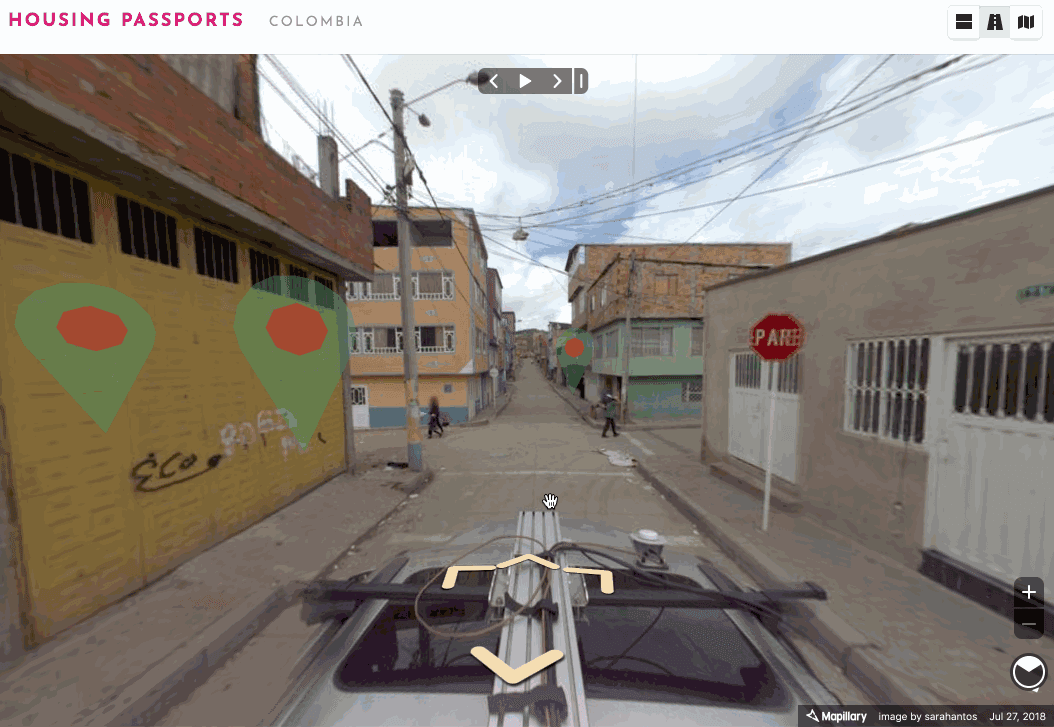

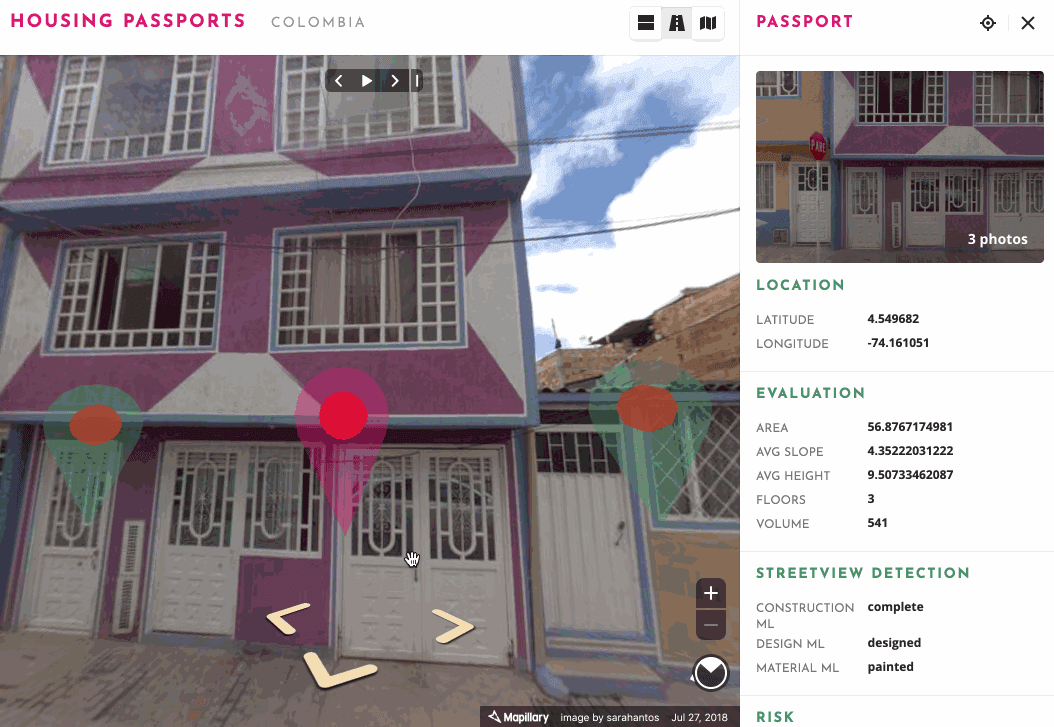

We developed an interactive tool to help decision makers explore this ML-derived data. Users can navigate in two modes: street view (using Mapillary’s engine) or the building footprint map. Clicking a building in either mode opens a “Housing Passport” pane, an overview that integrates multiple streams of information (Figures 4 and 5). The displayed information includes the three building properties we derived with machine learning, other known risks (e.g., from floods or landslides), the building’s location, and static street view images. As we improve this tool, we hope to help local communities and governments pinpoint buildings that need structural attention by surfacing the attribute(s) that make them dangerous.

Figure 4. Our web tool combines street view navigation with ML-derived housing information. The animation shows how to navigate through our pilot region (in Colombia) and open the “Housing Passport” pane containing building information and risks.

Figure 5. Users can also navigate using a building footprint map. Both the street view and building footprint maps allow users to select and view a building’s Housing Passport.

Going forward

With this pilot study completed, there are a number of new possibilities to explore. First, this work was limited to a small neighborhood in Colombia, so we hope to expand to new neighborhoods within Colombia as well as a handful of other countries. Second, we plan to make several improvements to the Housing Passport pane, including its format and organization. Third, we are working to release an anonymized street view dataset for other machine learning researchers. This will contain street view images and annotations for the same building properties we explored here. Once it is available, we will announce this open ML dataset in a separate blog post.

Additional resources

For more information on the work the World Bank is doing around resilient housing, check out their latest blog posts highlighting advances in sustainable cities. Recently, they also produced a podcast discussing the problem with multiple experts and covering innovative ways of addressing the issue.
