A Bioacoustic Search Platform for Conservation

Using audio and machine learning to monitor wildlife and support research

Overview

The A2O Bioacoustics Search Platform is an audio similarity search engine built in collaboration with Google Research and the Australian Acoustic Observatory (A2O). It uses machine learning and vector search to help monitor threatened or endangered species.

Challenge

A2O has over 2 petabytes of audio data collected by a network of sensors across the continent. This dataset is essential for monitoring wildlife, protecting endangered species, and conducting bioacoustic research. To be useful to researchers and citizen scientists, it must first be analyzed and labeled, but doing so manually is highly time-consuming and costly.

Outcome

An efficient search platform to find similar bird sounds from the extensive audio database, facilitating research and annotation to further the development of ML models in the bioacoustics field.

Background

The Australian Acoustic Observatory operates a network of 400 continuously recording acoustic sensors across the continent. During its five years of operation, A2O has collected over two petabytes of data. These raw audio files, ranging in length from minutes to hours, are freely available to the public. Most audio files have basic metadata, such as the recording's date, time, and location. However, only a subset of the dataset has been annotated, either by members of the A2O team or by the community.

Manual analysis and annotation of this volume of audio data is time-consuming and costly. Machine learning techniques offer an alternative: they can automatically identify and label bioacoustic sounds within the dataset. This approach would transform the raw audio files into a structured, useful resource for researchers and for low-cost automated systems that monitor ecosystem health.

Researchers at Google have been developing machine learning models that generate vector embeddings for audio, which enable operations like similarity search. Our goal was to operationalize their Perch model and build a tool that lets researchers use their own recordings of known species to find and track matching animal recordings in the A2O database. The immediate priority was an MVP that demonstrates what is possible when acoustic data and vector similarity search are combined to monitor animals reliably. The Perch model produces high-quality embeddings and can separate the input audio into different channels of background noise and individual animal sounds.

An Audio Search Platform

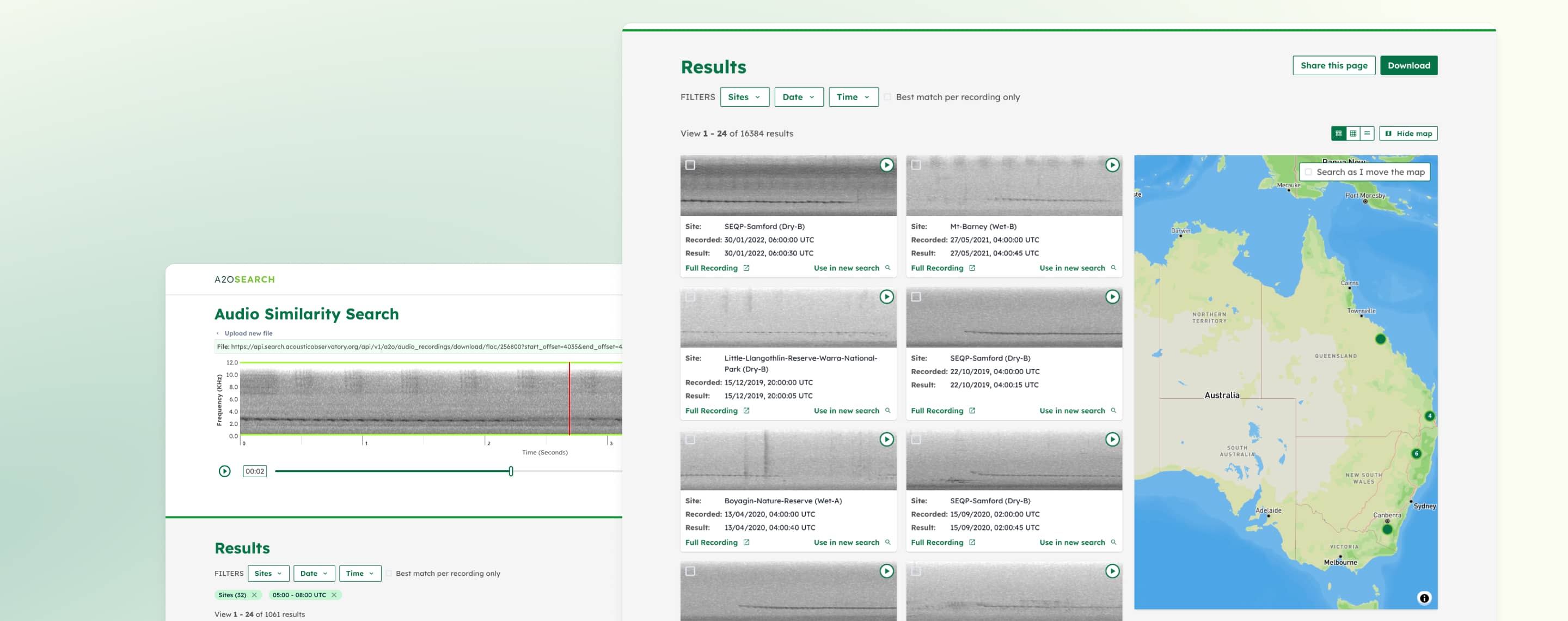

The product vision for the Bioacoustics Search Platform was a bioacoustic search-by-example experience: users upload a short clip of a bird sound and get back a list of similar bird sounds from the A2O database. To accomplish this, we built a similarity search engine powered by a vector database that stores A2O's audio files as embeddings. On top of the database, we built an API and a user interface. Users upload a short sound recording, and the platform returns a list of similar bird sounds from the A2O database, which they can filter by location and time.

Looking at the distribution of search results based on geography and time of day
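The search-by-example flow described above can be illustrated with a minimal Python sketch. It is not the platform's code: the `Clip` fields, the site labels, the hour range filter, and the three-dimensional embeddings are all made up for illustration, and a plain list stands in for the vector database.

```python
import math
from dataclasses import dataclass


@dataclass
class Clip:
    """One indexed audio segment. All fields are hypothetical."""
    clip_id: str
    embedding: list[float]
    site: str   # illustrative recording-site label
    hour: int   # local hour of day when the recording started, 0-23


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def search_by_example(query_embedding, clips, top_k=3, site=None, hours=None):
    """Apply optional metadata filters, then rank the rest by similarity.

    `hours` is an inclusive (start, end) pair; ranges that wrap past
    midnight are not handled in this sketch.
    """
    candidates = [
        c for c in clips
        if (site is None or c.site == site)
        and (hours is None or hours[0] <= c.hour <= hours[1])
    ]
    scored = [(cosine_similarity(query_embedding, c.embedding), c.clip_id)
              for c in candidates]
    scored.sort(reverse=True)  # most similar first
    return [(clip_id, round(score, 3)) for score, clip_id in scored[:top_k]]


# Made-up clips; real embeddings would come from the audio model.
clips = [
    Clip("a", [0.9, 0.1, 0.0], "site-north", 5),
    Clip("b", [0.8, 0.2, 0.1], "site-south", 6),
    Clip("c", [0.0, 1.0, 0.0], "site-north", 14),
    Clip("d", [0.7, 0.0, 0.3], "site-north", 6),
]
query = [1.0, 0.0, 0.0]  # embedding of the uploaded example clip

results = search_by_example(query, clips, top_k=2, site="site-north", hours=(4, 8))
print(results)  # [('a', 0.994), ('d', 0.919)]
```

Filtering before ranking keeps the example short. A production system would more likely push both the filter and the ranking down into the vector database.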

Embeddings, Vector Database and Similarity Search

Similarity search is a technique for finding related items in a large dataset. Closely related ideas power familiar products, such as how Shazam identifies the song you are listening to or how Spotify recommends music you are likely to enjoy. The search runs in a database, often called a vector database. What makes a vector database special is its search algorithms, which can run extremely fast over large amounts of data. For this project, we used Milvus as our vector database.
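One way a vector database can search quickly is to avoid comparing the query against every stored vector. The toy index below sketches that idea in Python, assuming a partition-based approach: vectors are bucketed by their nearest centroid, and a query only scans the closest buckets. The class name, the fixed centroids, and the `n_probe` parameter are illustrative. The sketch does not reflect how Milvus is implemented or how it was configured for this project.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    length = math.sqrt(dot(v, v))
    return [x / length for x in v]


class ToyPartitionedIndex:
    """Toy index: each vector is stored in the bucket of its nearest centroid."""

    def __init__(self, centroids):
        # Real systems learn centroids from the data; here they are given.
        self.centroids = [normalize(c) for c in centroids]
        self.buckets = {i: [] for i in range(len(centroids))}

    def _nearest_centroids(self, v, n):
        order = sorted(range(len(self.centroids)),
                       key=lambda i: dot(v, self.centroids[i]),
                       reverse=True)
        return order[:n]

    def add(self, item_id, vector):
        v = normalize(vector)
        bucket = self._nearest_centroids(v, 1)[0]
        self.buckets[bucket].append((item_id, v))

    def search(self, query, top_k=3, n_probe=1):
        """Scan only the n_probe closest buckets, then rank by cosine similarity."""
        q = normalize(query)
        candidates = []
        for bucket in self._nearest_centroids(q, n_probe):
            candidates.extend(self.buckets[bucket])
        scored = sorted(((dot(q, v), item_id) for item_id, v in candidates),
                        reverse=True)
        return [item_id for _, item_id in scored[:top_k]]


index = ToyPartitionedIndex(centroids=[[1.0, 0.0], [0.0, 1.0]])
index.add("clip-1", [0.9, 0.1])
index.add("clip-2", [0.8, 0.3])
index.add("clip-3", [0.1, 0.9])
index.add("clip-4", [0.2, 0.8])

fast = index.search([1.0, 0.2], top_k=3, n_probe=1)      # scans one bucket
thorough = index.search([1.0, 0.2], top_k=3, n_probe=2)  # scans both buckets
```

With `n_probe=1` the search touches half the data and returns only the two clips in the closest bucket. Raising `n_probe` trades speed for completeness, which is the usual tuning knob in approximate nearest-neighbor search.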

Embeddings are a way to represent data, such as an audio clip, as an n-dimensional vector, so that similar items end up close together in the vector space.
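To make the idea of a fixed-length vector concrete, here is a small Python sketch. The `toy_embedding` function is a hypothetical stand-in built from three hand-picked signal features. A learned model such as Perch produces its embeddings in a very different way and with many more dimensions. The point is only that clips of any length map to vectors of the same size, and that similar sounds land near each other.

```python
import math


def toy_embedding(samples):
    """Map a waveform of any length to a fixed 3-dimensional vector.

    Hand-picked features, purely illustrative; not how a learned model works.
    """
    n = len(samples)
    mean_abs = sum(abs(s) for s in samples) / n        # average loudness
    rms = math.sqrt(sum(s * s for s in samples) / n)   # signal energy
    crossings = sum(1 for a, b in zip(samples, samples[1:])
                    if (a < 0) != (b < 0))
    zero_crossing_rate = crossings / (n - 1)           # crude pitch proxy
    return [mean_abs, rms, zero_crossing_rate]


def sine(freq_hz, seconds, sample_rate=8000):
    """Generate a pure tone as a list of samples."""
    count = int(seconds * sample_rate)
    return [math.sin(2 * math.pi * freq_hz * t / sample_rate)
            for t in range(count)]


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


low_short = toy_embedding(sine(200, 0.5))   # 4,000 samples
low_long = toy_embedding(sine(200, 2.0))    # 16,000 samples
high = toy_embedding(sine(2000, 0.5))       # same length as low_short, higher pitch

same_pitch_distance = euclidean(low_short, low_long)
different_pitch_distance = euclidean(low_short, high)
```

Both low-pitched tones end up almost on top of each other despite their different durations, while the high-pitched tone sits much farther away. Similarity search relies on this kind of geometry.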
