Language Interfaces for Maps

Estimated 6 min read

Combining the power of AI with human insight to make geospatial analysis more accessible and reliable.

The future of geospatial tech speaks your language.

As we continue to generate unprecedented volumes of Earth observation data, we face a crucial challenge: How do we make this wealth of information accessible to those who need it most?

Over the past year, we’ve explored how Large Language Models (LLMs) can bridge this gap, transforming how we interact with geospatial data through natural language.

The Evolution of Spatial Queries: From Technical to Natural

Consider the question: "Show me all the restaurants in South Goa that serve Mediterranean cuisine."

While humans naturally think in these terms, traditional map interfaces require translation into coordinates, geometries, and database queries. This disconnect creates barriers for users who could benefit from spatial analysis but lack technical GIS expertise.

Mapping interfaces today are already quite good at this kind of geographic search, but the challenge extends beyond simple queries. When investigating deforestation along the Amazon River or tracking EV charging stations between two locations, map users think in terms of regions and relationships rather than precise geographical boundaries. Geospatial LLMs address this challenge by enabling natural language understanding of the spatial elements (points, lines, and polygons) that form the foundation of geographical analysis.

LLMs as Orchestrators

Many mapping applications are fundamentally data-driven, and reliability and trust are key factors in data-driven applications. While the creativity of generative AI is desirable in use cases like image generation, it is often a dealbreaker when the output is data. When we obtain the outline of a geographic area or ask for the deforestation rate in our state, we need to know exactly where the answers come from.

In these scenarios, relying on an LLM for answers is not enough, even if it is usually correct. For data-driven use cases, “usually” doesn’t suffice: we need consistent, verifiable data every time. Including a link to the original source also helps build trust in the system. This cannot be achieved with traditional prompt-driven LLM chatbots. Our solution? Delegate specialized tasks to purpose-built tools while letting LLMs serve as intelligent orchestrators.

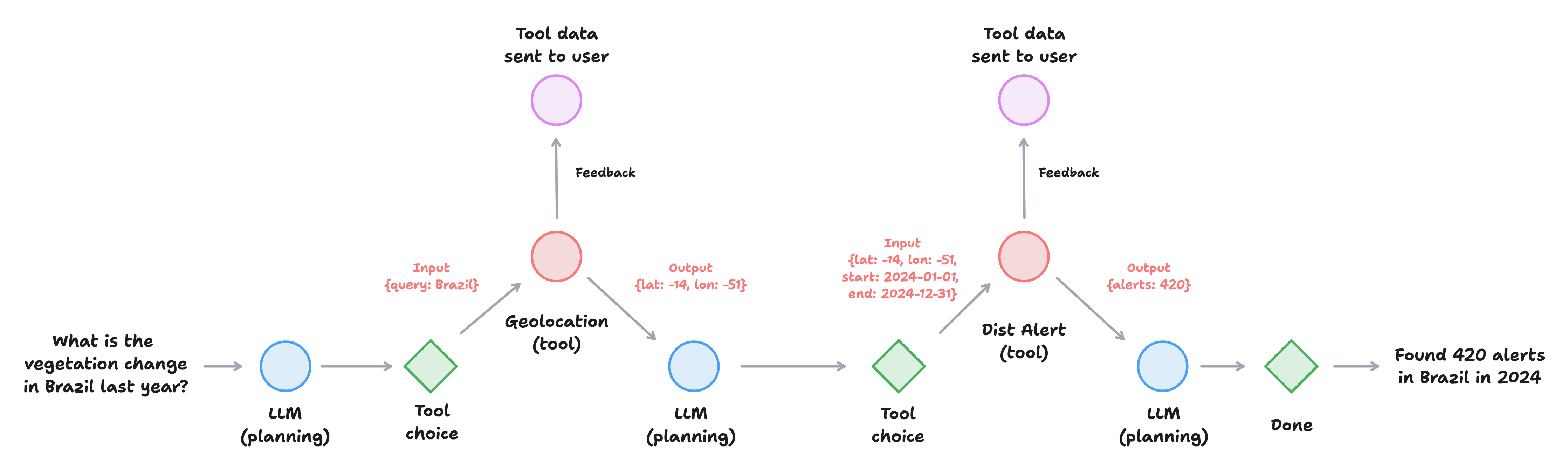

Agentic frameworks can be used to build this kind of LLM-driven application: intelligent assistants that call tools to perform analysis. These tools are pieces of code packaged as functions with well-defined inputs and outputs. Inputs are validated before the tool is called, and outputs are explainable and have a clearly identifiable source. In such a setup, one can build agents that never use an LLM to generate output data directly, so the numbers and geometries returned to the user cannot be hallucinated by the model.
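A minimal sketch of what such a tool could look like, assuming a tool is simply a named function paired with an input validator and a declared data source. `Tool`, `ToolResult`, `ToolInputError`, and the example `bbox_to_polygon` tool are hypothetical names chosen for illustration, not part of any particular agentic framework.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


class ToolInputError(ValueError):
    """Raised when the arguments proposed by the LLM fail validation."""


@dataclass(frozen=True)
class ToolResult:
    tool: str
    value: Any
    source: str  # where the value comes from, shown to the user
    inputs: dict = field(default_factory=dict)  # echoed so the call can be reproduced


@dataclass(frozen=True)
class Tool:
    name: str
    source: str
    validate: Callable[[dict], None]  # raises ToolInputError on bad input
    run: Callable[[dict], Any]

    def __call__(self, args: dict) -> ToolResult:
        self.validate(args)  # inputs are checked before the tool runs
        return ToolResult(self.name, self.run(args), self.source, dict(args))


def _check_bbox(args: dict) -> None:
    bbox = args.get("bbox")
    if not (isinstance(bbox, (list, tuple)) and len(bbox) == 4):
        raise ToolInputError("bbox must be [west, south, east, north]")
    if not all(isinstance(v, (int, float)) and not isinstance(v, bool) for v in bbox):
        raise ToolInputError("bbox values must be numbers")
    west, south, east, north = bbox
    if not (-180 <= west < east <= 180 and -90 <= south < north <= 90):
        raise ToolInputError(f"bbox out of range or inverted: {bbox}")


def _bbox_to_polygon(args: dict) -> dict:
    west, south, east, north = args["bbox"]
    # GeoJSON exterior ring: counter-clockwise, first point repeated at the end.
    ring = [[west, south], [east, south], [east, north], [west, north], [west, south]]
    return {"type": "Polygon", "coordinates": [ring]}


# Hypothetical example tool: deterministic, validated, and with a stated source.
bbox_to_polygon = Tool(
    name="bbox_to_polygon",
    source="computed locally from the input bounding box",
    validate=_check_bbox,
    run=_bbox_to_polygon,
)
```

The LLM's only job here is to propose `args`; a malformed proposal raises `ToolInputError` before any work is done, and every result carries its inputs and source so it can be shown to the user and reproduced.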

Consider analyzing tree cover loss in Pará, Brazil. We first need to define the region of Pará in geographic terms. For this, we can use a geocoding tool that calls a known API, such as the OpenCage geocoding API. The input will be “Pará, Brazil,” and the output will be data from a well-known source. There is no ambiguity in the proposed location for the region, because the result can be explained and reproduced. Similarly, tree cover loss can be computed from transparent, well-known data sources such as STAC catalogs or Google Earth Engine, so the results can be traced back to their inputs.
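The first two steps of this example can be sketched as plain functions: build an OpenCage forward-geocoding request, turn the `bounds` of the first result into a bounding box, and use that box in a STAC API item search body. This is a sketch under assumptions: the HTTP calls themselves are omitted, the collection ID is a hypothetical placeholder, and the tree cover loss computation is not shown.

```python
from urllib.parse import urlencode

OPENCAGE_URL = "https://api.opencagedata.com/geocode/v1/json"


def geocode_request_url(place: str, api_key: str) -> str:
    """Build an OpenCage forward-geocoding request URL for a place name."""
    return f"{OPENCAGE_URL}?{urlencode({'q': place, 'key': api_key, 'limit': 1})}"


def bbox_from_opencage(response: dict) -> list[float]:
    """Turn the first OpenCage result's `bounds` into [west, south, east, north]."""
    results = response.get("results") or []
    if not results or "bounds" not in results[0]:
        # Nothing usable came back: surface this to the user instead of guessing.
        raise ValueError("no bounded result; ask the user to refine the place name")
    ne = results[0]["bounds"]["northeast"]
    sw = results[0]["bounds"]["southwest"]
    return [sw["lng"], sw["lat"], ne["lng"], ne["lat"]]


def stac_search_body(bbox: list[float], start: str, end: str, collection: str) -> dict:
    """JSON body for a STAC API item search (POST /search) over a time range."""
    return {
        "collections": [collection],  # hypothetical collection ID supplied by the caller
        "bbox": bbox,
        "datetime": f"{start}/{end}",  # RFC 3339 interval
        "limit": 100,
    }
```

Because each step is a deterministic function of its inputs, the request URL, the derived bounding box, and the search body can all be shown to the user and rerun later.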

Use LLMs to orchestrate analysis, let tools carry it out

Simplified workflow diagram for a tool-driven agent. The LLM chooses the tools to call, and their output is both processed further and sent to the user as feedback. The data comes from well-known sources, and outputs can be reproduced from the inputs.
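The loop in this diagram can be sketched in a few lines. In this sketch, `choose_next` stands in for the LLM: it sees the results so far and returns either the next tool call or `None` when it is done. The function names and the step format (`{"tool": ..., "args": ...}`) are hypothetical; real agentic frameworks each define their own.

```python
from typing import Any, Callable, Optional

Step = dict  # {"tool": name, "args": {...}}, as proposed by the LLM


def run_agent(
    choose_next: Callable[[list[dict]], Optional[Step]],
    tools: dict[str, Callable[..., Any]],
    max_steps: int = 10,
) -> list[dict]:
    """Execute LLM-chosen tool calls and return a trace of every step.

    The model only picks tools and arguments; every value in the trace
    comes from a tool, never from generated text.
    """
    trace: list[dict] = []
    for _ in range(max_steps):
        step = choose_next(trace)  # the LLM sees earlier results and picks the next call
        if step is None:
            return trace
        name, args = step["tool"], step["args"]
        if name not in tools:
            raise KeyError(f"LLM requested unknown tool {name!r}")
        result = tools[name](**args)
        # Each entry could also be streamed to the user interface as feedback.
        trace.append({"tool": name, "args": args, "result": result})
    raise RuntimeError("agent did not finish within max_steps")
```

Capping the number of steps keeps a confused model from looping forever, and the returned trace is exactly the information a user needs to inspect or reproduce the run.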

Building Trust with Human in the Loop

Another crucial component to building trust in LLM-driven interfaces is human verification. A well-designed interface will inform the user about the tools called by the LLM and allow the user to interrupt the workflow and request changes. If the initially proposed location is not correct, the query can be refined. If the source for the tree cover loss is not the one the user is familiar with, another data source can be requested.

Rather than fully automated workflows, we've found that the most robust solutions combine AI capabilities with human insight. Continuous feedback on what tools were called and a way for the user to drill into the details of every step will create trust and make the output truly actionable.
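One way to put a human in this loop is to route every proposed tool call through a review callback before it runs. In the sketch below, the reviewer can approve a call, replace its arguments (for example, with a refined place name), or stop the workflow. `run_with_review`, `WorkflowInterrupted`, and the `"stop"` convention are hypothetical choices for illustration.

```python
from typing import Any, Callable, Optional


class WorkflowInterrupted(Exception):
    """Raised when the user stops the workflow before a tool call."""


# The reviewer sees each proposed call and returns:
#   None     -> approve the call as proposed
#   a dict   -> replacement arguments chosen by the user
#   "stop"   -> interrupt the workflow
Reviewer = Callable[[str, dict], Optional[Any]]


def run_with_review(plan: list[dict], tools: dict[str, Callable[..., Any]], review: Reviewer) -> list[dict]:
    trace = []
    for step in plan:
        name, args = step["tool"], dict(step["args"])
        decision = review(name, args)
        if decision == "stop":
            raise WorkflowInterrupted(f"user stopped the workflow before {name!r}")
        edited = isinstance(decision, dict)
        if edited:
            args = decision  # user-corrected inputs replace the LLM's proposal
        trace.append({"tool": name, "args": args, "result": tools[name](**args), "edited": edited})
    return trace
```

Recording whether each step was edited keeps the trace honest about which inputs came from the model and which came from the user.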

Developing a common set of generalized tools is another way to increase trust in LLM-based systems. Tooling should be at the heart of geospatial LLM applications. We see a need to build a set of "well-known" tools that can be used across different applications. Geocoding is a great example of a tool that many geo applications require. Jason Gilman has created an excellent example of what an LLM-driven geocoding tool could look like. Another example could be tooling to search and retrieve data from STAC catalogs. As a community, we should create libraries of reusable LLM tools like these. This would not only save time but also increase transparency.
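A shared tool library could be as simple as a registry of plain functions that record their data source and can describe themselves to whichever LLM an application uses. The sketch below assumes a hypothetical `tool` decorator, `REGISTRY`, and provider-neutral `describe` format; real agentic frameworks ship their own decorators and schemas, and the `geocode` body is left unimplemented on purpose.

```python
import inspect
from typing import Any, Callable

# Shared across applications: tool name -> function.
REGISTRY: dict[str, Callable[..., Any]] = {}


def tool(source: str):
    """Register a function as a reusable tool and record where its data comes from."""
    def decorator(fn: Callable[..., Any]) -> Callable[..., Any]:
        fn.tool_source = source
        REGISTRY[fn.__name__] = fn
        return fn
    return decorator


def describe(fn: Callable[..., Any]) -> dict:
    """Provider-neutral description an application could translate for its LLM."""
    params = {
        name: getattr(p.annotation, "__name__", str(p.annotation))
        for name, p in inspect.signature(fn).parameters.items()
    }
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "parameters": params,
        "source": fn.tool_source,
    }


@tool(source="https://opencagedata.com")
def geocode(place: str, limit: int = 1) -> dict:
    """Return candidate locations for a place name."""
    raise NotImplementedError("call the geocoding API here")
```

Keeping the description derived from the function signature means the documentation an LLM sees cannot drift from the code that actually runs.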

From Theory to Practice: The Land and Carbon Lab

As a community, we are at the beginning of this journey. Earth Copilot, developed by NASA and Microsoft, is one of the few examples to date that works toward such a vision. We are currently implementing these principles in collaboration with the Land and Carbon Lab and WRI, building a set of prototypes that show what trustworthy and reproducible geo LLM applications look like in practice.

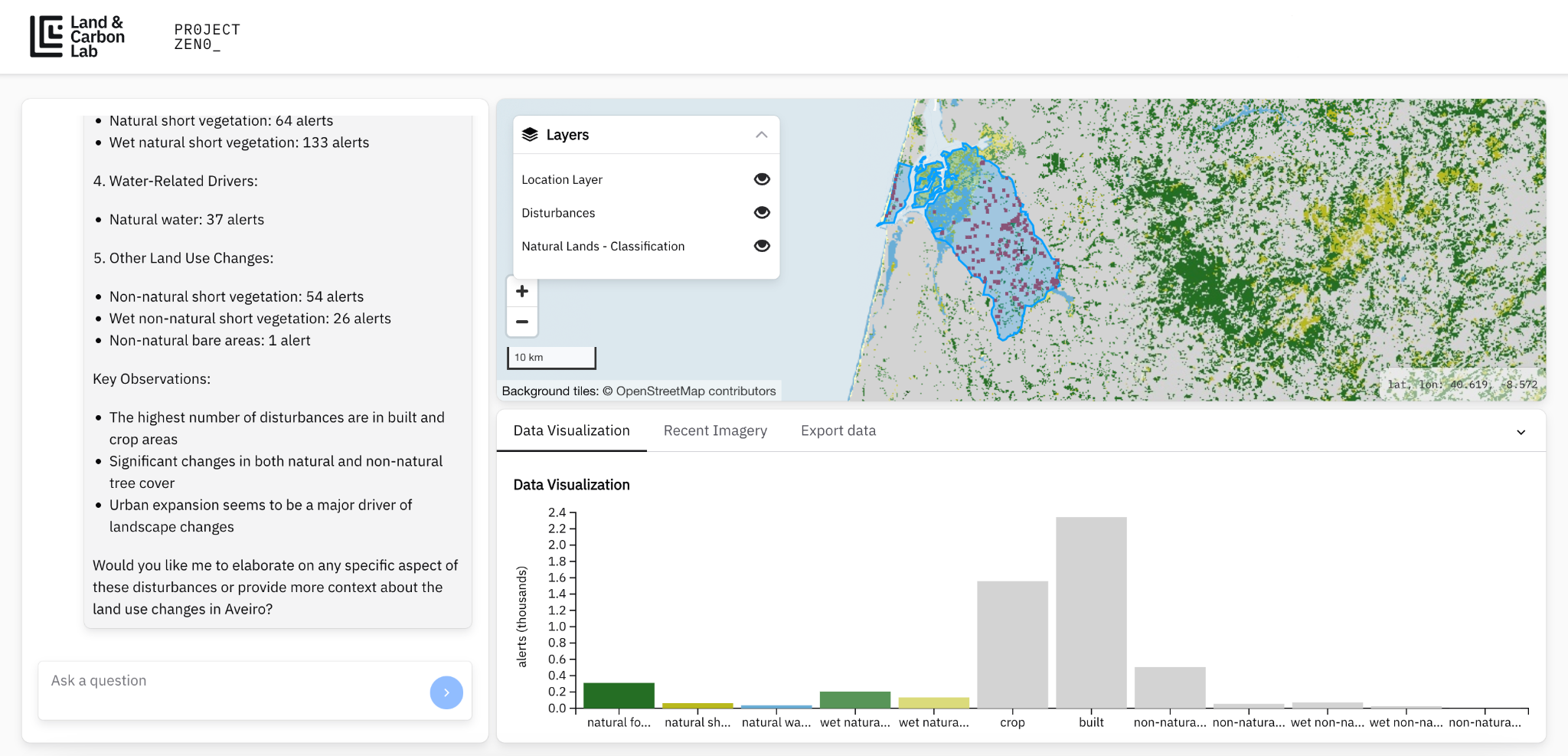

So far, we have built agents that let users search for locations and compute statistics on UMD/GLAD vegetation disturbance alerts. Several options for summarizing disturbances by land cover and by likely driver of disturbance are built in as well. We are also working on exposing these tools and agents through a REST API to build front-end applications.
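Summarizing disturbances by land cover or driver is, at its core, a group-by over alert records. The sketch below assumes the alerts have already been fetched and intersected with the area of interest; the `Alert` fields and the `summarize` function are hypothetical and do not reflect the actual schema of the UMD/GLAD alert data.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    # One disturbance alert inside the area of interest (illustrative fields only).
    area_ha: float
    land_cover: str
    driver: str


GROUPABLE = {"land_cover", "driver"}


def summarize(alerts: list[Alert], by: str) -> dict[str, float]:
    """Total alerted area in hectares, grouped by land cover or by driver, largest first."""
    if by not in GROUPABLE:
        raise ValueError(f"cannot group by {by!r}; choose one of {sorted(GROUPABLE)}")
    totals: Counter = Counter()
    for alert in alerts:
        totals[getattr(alert, by)] += alert.area_ha
    return dict(totals.most_common())
```

Restricting `by` to a fixed set of attributes is another form of input validation: the LLM can choose how to summarize, but only among options the tool actually supports.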

There's a lot of room for innovation when it comes to interface design and user experience. LLMs can fundamentally change how we interact with maps and data. We hope that these kinds of tools democratize access to geospatial data and make it useful for larger audiences. One example is that state-of-the-art LLMs come with built-in multilingual support. This means the chat interaction is multilingual almost by default, making these tools more accessible to people on the ground who are not geospatial experts.

In this project, a cross-functional team uses fast development cycles to test these ideas and new concepts in practice. This work is very much in progress and will evolve over the coming months, and we are excited to walk the talk in collaboration with our excellent partner, Land and Carbon Lab.

Screenshot of the LLM-driven mapping application prototype we are developing in collaboration with the Land and Carbon Lab.

Future work

We’re optimistic about the potential of LLMs to enable more intuitive geospatial interfaces. As we look to the future, we are watching several critical threads. Generalizing approaches to geo-enabled LLM applications is a challenge, as agentic frameworks and LLM capabilities are both evolving quickly. Navigating multiple model backends and finding the balance between local and proprietary models are important for building efficient and transparent applications. And as these tools evolve, it will be interesting to see how interfaces change. We see LLM-driven geo applications as a paradigm shift in how we think about mapping interfaces.
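One way to keep an application portable across model backends is to code against a small interface and choose the backend per request. The sketch below assumes a hypothetical `ChatBackend` protocol, a stand-in `EchoBackend` in place of real local or hosted model clients, and an illustrative routing policy; it is not tied to any specific provider's API.

```python
from dataclasses import dataclass
from typing import Protocol


class ChatBackend(Protocol):
    name: str

    def complete(self, prompt: str) -> str: ...


@dataclass
class EchoBackend:
    """Stand-in for a real model client (local or hosted) that just echoes the prompt."""
    name: str

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


@dataclass
class Router:
    local: ChatBackend
    hosted: ChatBackend

    def pick(self, *, sensitive: bool, needs_large_model: bool) -> ChatBackend:
        # Illustrative policy: sensitive requests always stay on the local model.
        if sensitive:
            return self.local
        return self.hosted if needs_large_model else self.local
```

Because tools, not the model, produce the data, swapping the backend behind this interface should change how well requests are interpreted, not where the answers come from.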

The future of geospatial analysis lies not just in powerful tools but in making those tools truly accessible through natural language interfaces. We're working to make that future a reality through careful integration of LLMs, specialized tools, and human insight.

Want to explore these concepts further or collaborate on geospatial language interfaces? Get in touch or check out our open-source projects on GitHub.
