Open Access to Data and Code

Ensuring open access to both data and code is pivotal for fostering transparency, reproducibility, and collaboration in scientific research. It allows other researchers to scrutinize, verify, and build upon existing findings, which reinforces the credibility of scientific discoveries. When both are freely available, the scientific community can collaboratively assess the robustness of studies, identify potential biases, and replicate experiments, upholding the integrity of the research process. This mode of development and publication not only helps validate findings but also makes discrepancies and errors easier to identify, contributing to the refinement and advancement of scientific knowledge.

Removing barriers to access encourages interdisciplinary cooperation and allows experts from diverse fields to leverage existing datasets and methodologies in their own research, which reduces redundant effort and accelerates scientific progress. Shared resources fuel collaborative efforts and lead to greater and faster innovation.

Below, we discuss some important protocols and recommendations for implementing open access to data and code effectively. We hope they help foster a culture of transparency, reproducibility, and collaboration in scientific research and, ultimately, enhance the quality and impact of scientific discoveries.

Using Common Platforms for Development, Sharing, and Collaboration

Sharing code on GitHub:

GitHub is a popular platform for publishing code and facilitating collaborative development.

GitHub repositories are used to host code, scripts, and related documentation.

GitHub allows researchers to commit changes regularly to track the evolution of a codebase over time.

In GitHub, developers work from branches for new features or experimental changes so as not to affect the main codebase.

GitHub’s issue tracking and project management features can be used to coordinate tasks and communicate with collaborators.

Sharing data on GitHub:

Occasionally, you might want to store small datasets directly within a GitHub repository (e.g., as fixtures for unit tests, which we discuss further below).

NOTE: Sharing data on GitHub is rare and should be done only for very small datasets and specific purposes. We discuss more methods for sharing datasets publicly in the lesson “Open Formats and Tools.”

For large datasets, consider providing download links to external storage platforms like Amazon S3 or Google Cloud Storage.

Include a README file in your repository (discussed in more detail further below) that describes the dataset, its format, and any preprocessing steps required.

Using Hugging Face for model and dataset sharing:

Hugging Face is a platform that specializes in hosting and sharing machine learning models and datasets.

Use Hugging Face’s datasets library to upload and share your datasets with the community.

Similarly, you can use the model hub to host and share trained models, along with associated code for implementation.

Choosing Open-Access Licenses:

Selecting the appropriate open-access license is essential for defining how others can use, modify, and redistribute your code and data.

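Common open-access licenses include the MIT License, the Apache License, the GNU General Public License (GPL), and Creative Commons licenses. The first three are software licenses. Creative Commons licenses are generally used for data, documentation, and other non-software content rather than for code.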

Include a LICENSE file in your repository that clearly specifies the terms and conditions under which your code and data can be used.

Documenting Usage and Citation:

Provide clear instructions on how others can use your code and data, including installation steps, dependencies, and examples.

Encourage users to cite your work properly by providing citation information and DOI (Digital Object Identifier) links where applicable.

You can create DOIs for GitHub repositories via Zenodo. To do so, you will need an ORCID, GitHub, or institutional login. The process involves specifying some metadata about your repository, such as its title, description, authors, and keywords. You can also publish updated versions of the repository, and each version receives its own DOI.

Include a CONTRIBUTING.md file (also discussed in greater detail farther below) in your repository that outlines guidelines for contributing to and citing your project.

Preparing Data and Code for Sharing

Let’s talk about how to organize your data and code in a clear and well-documented structure. Modern best practices for organizing Python code focus on improving readability, maintainability, scalability, and collaboration among developers. The following are some good practice recommendations.

1. Follow PEP 8 guidelines:

Adhere to the Python Enhancement Proposal (PEP) 8 style guide for consistent and readable code formatting.

Use tools like flake8 or pylint to automatically check code adherence to PEP 8 standards.

2. Use descriptive naming:

Choose meaningful and descriptive names for variables, functions, classes, and modules to enhance code clarity.

Follow the snake_case naming convention for variables and functions, CapWords for class names, and ALL_CAPS for constants.

Use nouns for variable names and verbs combined with nouns for functions.
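Here is a short sketch of these conventions. The constant, class, and function names are hypothetical and loosely modeled on the satellite-imagery example used in this section.

```python
# Constants: ALL_CAPS
MAX_CLOUD_COVER = 80  # percent
DEFAULT_BANDS = ("red", "green", "blue")


# Classes: CapWords
class SceneMetadata:
    """Descriptive fields for one satellite scene."""

    def __init__(self, scene_id: str, cloud_cover: float) -> None:
        # Attributes and variables: snake_case nouns
        self.scene_id = scene_id
        self.cloud_cover = cloud_cover


# Functions: snake_case, verb + noun
def filter_clear_scenes(
    scenes: list[SceneMetadata], max_cloud_cover: float = MAX_CLOUD_COVER
) -> list[SceneMetadata]:
    """Return the scenes whose cloud cover is below max_cloud_cover."""
    return [scene for scene in scenes if scene.cloud_cover < max_cloud_cover]


clear_scenes = filter_clear_scenes(
    [SceneMetadata("scene_a", 12.5), SceneMetadata("scene_b", 91.0)]
)
```

Each name tells the reader what kind of thing it is before they look at its definition.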

3. Organize code into modules and packages:

Break code into logical functions, modules and packages to group related functionality together.

Avoid cluttering the main script by moving reusable code into separate functions and modules.

Create a clear folder structure and use packages to organize related modules into hierarchical namespaces.

4. Separation of concerns:

Follow the principle of separation of concerns by separating different aspects of functionality into distinct modules or classes.

Divide code into functional parts (e.g., data pre-processing module, data loading module, model architecture module) to improve maintainability and scalability.
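Items 3 and 4 can be sketched together for a hypothetical project that summarizes NDVI values from a CSV file. The section comments mark which (hypothetical) module each function would live in. Everything sits in one block here only so the example runs on its own. The entry point does nothing but wire the pieces together.

```python
import csv
import io
import statistics


# --- data loading (would live in a hypothetical data/loading.py) ---
def load_rows(csv_text: str) -> list[dict[str, str]]:
    """Parse CSV text into a list of row dictionaries."""
    return list(csv.DictReader(io.StringIO(csv_text)))


# --- preprocessing (hypothetical data/preprocessing.py) ---
def extract_values(rows: list[dict[str, str]], column: str) -> list[float]:
    """Convert one column to floats, skipping empty cells."""
    return [float(row[column]) for row in rows if row[column].strip()]


# --- analysis (hypothetical analysis/summary.py) ---
def summarize(values: list[float]) -> dict[str, float]:
    """Compute simple summary statistics."""
    return {"mean": statistics.mean(values), "max": max(values)}


# --- entry point (hypothetical main.py): only wires the steps together ---
def main(csv_text: str) -> dict[str, float]:
    rows = load_rows(csv_text)
    ndvi_values = extract_values(rows, "ndvi")
    return summarize(ndvi_values)


if __name__ == "__main__":
    print(main("site,ndvi\nA,0.2\nB,\nC,0.6\n"))
```

Because loading, cleaning, and analysis are separate, you can swap the CSV reader for another data source without touching the statistics code.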

5. Use docstrings and comments where necessary:

Include descriptive docstrings for modules, classes, functions, and methods to document their purpose, parameters, return values, and usage examples.

Use tools like Sphinx to generate documentation from docstrings automatically.

Commenting code is also advisable when the code is very compact or hard to parse intuitively.

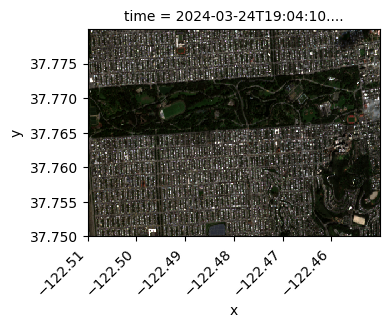

In the next cell, you’ll find an example of using docstrings within a function to describe its purpose and that of the arguments required to execute it. Don’t worry about understanding everything going on in the cell yet. Just notice how we can easily understand the role of the function. It’s also worth noting that the code is well commented and abides by the naming conventions mentioned above.

```python
from datetime import datetime, timedelta, timezone
import warnings

from shapely.geometry import box
import matplotlib.pyplot as plt
import pystac_client
import stackstac
import xarray as xr

warnings.filterwarnings('ignore')

STAC_URL = "https://earth-search.aws.element84.com/v1"  # AWS public STAC catalog
COLLECTION = "sentinel-2-l2a"  # Sentinel-2 collection id
BBOX = [-122.51, 37.75, -122.45, 37.78]  # Bounding box for a subset of San Francisco
START_DATE = datetime.now(timezone.utc) - timedelta(weeks=4)
END_DATE = datetime.now(timezone.utc)
MAX_CLOUD_COVER = 80  # In percentage of pixels
BANDS = ["red", "green", "blue", "nir"]  # Specific bands we want to use from Sentinel-2
EPSG = 4326


def read_image_from_stac(
    stac_url: str,
    collection: str,
    bbox: list,
    start_date: datetime,
    end_date: datetime,
    bands: list,
    max_cloud_cover: int,
    epsg: int,
) -> xr.DataArray:
    """
    Read the best image from within a temporal range, based on cloud coverage,
    within the specified bounding box from a STAC catalog.

    Parameters:
        stac_url (str): The URL of the STAC catalog.
        collection (str): Name of the collection to search within the STAC catalog.
        bbox (list): Bounding box coordinates [west, south, east, north].
        start_date (datetime): Start date for temporal filtering.
        end_date (datetime): End date for temporal filtering.
        bands (list): List of bands to retrieve (e.g., ["red", "green", "blue"]).
        max_cloud_cover (int): Maximum cloud cover percentage allowed.
        epsg (int): EPSG code for the coordinate reference system.

    Returns:
        xr.DataArray: The image as an xarray DataArray.

    Raises:
        ValueError: If no items in the catalog match the search criteria.
    """
    # Define area of interest (AOI)
    area_of_interest = box(*bbox)

    # Connect to the STAC catalog
    catalog = pystac_client.Client.open(stac_url)

    # Search for items within the specified date range and AOI
    search = catalog.search(
        collections=[collection],
        datetime=(start_date, end_date),
        intersects=area_of_interest,
        max_items=100,
        query={"eo:cloud_cover": {"lt": max_cloud_cover}},
    )
    items = search.item_collection()

    if not items:
        raise ValueError("No suitable items found in the STAC catalog")

    # Choose the "best" item: the one with the lowest cloud cover
    item = min(items, key=lambda it: it.properties["eo:cloud_cover"])

    # Stack the requested assets (e.g., red, green, blue bands) into an xarray DataArray
    stack = stackstac.stack(
        item,
        bounds=bbox,
        snap_bounds=False,
        dtype="float32",
        epsg=epsg,
        rescale=False,
        fill_value=0,
        assets=bands,
    )

    return stack.compute()


# Example usage:
try:
    sentinel_image = read_image_from_stac(
        STAC_URL, COLLECTION, BBOX, START_DATE, END_DATE, BANDS, MAX_CLOUD_COVER, EPSG
    )
    print("Sentinel-2 image loaded successfully")

    # Plot the true color image
    plot = sentinel_image.sel(band=["red", "green", "blue"]).plot.imshow(
        row="time", rgb="band", vmin=0, vmax=5000
    )

    # Rotate x-axis labels diagonally
    plt.xticks(rotation=45, ha='right')
    plt.show()
except ValueError as ve:
    print("Error:", ve)
```

Sentinel-2 image loaded successfully

6. Apply DRY (Don’t Repeat Yourself) principle:

Avoid code duplication by extracting common functionality into reusable functions, classes, or modules.

Encapsulate repetitive patterns into helper functions or utility modules to promote code reuse.

In general, we want to avoid rewriting boilerplate code. Instead, we abstract it into reusable modules and/or use libraries that already hide low-level functionality. A good example for those who program in Python and work with satellite imagery is rasterio. This open-source library wraps GDAL's low-level raster input/output in concise, general-purpose functions that apply across many downstream applications.
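Here is a small illustration of DRY in the satellite-imagery setting. Two common spectral indices, NDVI = (NIR - Red) / (NIR + Red) and NDWI = (Green - NIR) / (Green + NIR), are both normalized differences, so one helper can serve both. The function names are hypothetical. Plain Python lists stand in for the NumPy or xarray arrays you would normally use.

```python
def normalized_difference(band_a: list[float], band_b: list[float]) -> list[float]:
    """Compute (a - b) / (a + b) element-wise, returning 0.0 where a + b == 0."""
    return [
        (a - b) / (a + b) if (a + b) != 0 else 0.0
        for a, b in zip(band_a, band_b)
    ]


# The shared formula lives in one place; each index is now a one-line call
def compute_ndvi(nir: list[float], red: list[float]) -> list[float]:
    return normalized_difference(nir, red)


def compute_ndwi(green: list[float], nir: list[float]) -> list[float]:
    return normalized_difference(green, nir)
```

If the zero-division handling ever needs to change, it changes once rather than in every index function.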

7. Follow Single Responsibility Principle (SRP):

Try to ensure that each module, class, or function has a single responsibility or purpose.

Refactor complex functions or classes into smaller, focused units of functionality to improve readability and maintainability.
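In the sketch below, a report-building task is split into single-purpose functions for parsing, filtering, and formatting. A thin function composes them. The names and the JSON layout are hypothetical.

```python
import json


def parse_scene_records(raw_json: str) -> list[dict]:
    """Only responsibility: turn raw JSON text into Python objects."""
    return json.loads(raw_json)


def select_clear_scenes(records: list[dict], max_cloud_cover: float) -> list[dict]:
    """Only responsibility: apply the cloud-cover filter."""
    return [r for r in records if r["cloud_cover"] < max_cloud_cover]


def format_report(records: list[dict]) -> str:
    """Only responsibility: render the selected scenes as text."""
    return "\n".join(f"{r['id']}: {r['cloud_cover']:.1f}% cloud" for r in records)


def build_clear_scene_report(raw_json: str, max_cloud_cover: float = 20.0) -> str:
    """Compose the single-purpose steps; each one can be tested on its own."""
    records = parse_scene_records(raw_json)
    return format_report(select_clear_scenes(records, max_cloud_cover))
```

Compared with one function that does all three jobs, a change to the report format now touches only format_report.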

8. Use configuration files:

Using configuration files to centralize parameters and constants is a common and effective practice in software development. It allows you to separate configuration details from code logic, making it easier to manage and update experiments or workflows without modifying the code itself.

Choose a configuration file format:

Common formats include JSON, YAML, INI, and TOML. Choose the format that best suits your project’s requirements and preferences.

JSON (JavaScript Object Notation) is a lightweight data interchange format that is often used for configuration files due to its simplicity and widespread support.

YAML is a human-readable data serialization format that is commonly used for configuration files.

An INI file, short for “Initialization file,” is a simple and widely used format for storing settings and configuration data, particularly on Windows systems. INI files are plain text files organized into sections, each containing key-value pairs. Unlike JSON, YAML, or TOML, they offer only this single level of nesting.

TOML (Tom’s Obvious, Minimal Language) is a configuration file format that aims to be easy to read due to its minimal syntax.

Define configuration parameters:

Identify the parameters in your code that are subject to change or customization, such as file paths, API endpoints, database connection details, argument flags, hyperparameters, logging settings, etc.

Create a config file:

Create a dedicated configuration file (e.g., config.json, config.yaml) to store the configuration parameters.

Organize parameters hierarchically if needed, especially for complex configurations.

An example in YAML format for a simple image classification experiment could look like the following:

```yaml
model:
  type: CNNClassifier
  num_classes: 10
  pretrained: true
  backbone: resnet18
train:
  dataloader:
    batch_size: 64
    shuffle: true
    num_workers: 4
  optimizer:
    type: Adam
    lr: 0.001
    weight_decay: 0.0001
  scheduler:
    type: StepLR
    step_size: 7
    gamma: 0.1
  epochs: 20
  checkpoint_path: checkpoints/
  log_interval: 100
test:
  dataloader:
    batch_size: 64
    num_workers: 4
  checkpoint: checkpoints/best_model.pth
```

Load configuration in code:

Write code to load the configuration file and parse its contents into a data structure (e.g., dictionary, object) that can be accessed programmatically.

This is an area where boiler-plate code can and should be reduced. There are many libraries that help in parsing and loading configuration files.

Following are a few examples of configuration parsers available for Python. The choice of parser depends on factors such as the complexity of your configuration data, the desired syntax, and any specific features or requirements of your project:

ConfigParser (built-in): ConfigParser is a basic configuration file parser included in Python’s standard library. It provides a simple syntax for configuring key-value pairs in an INI-style format. While it’s lightweight and easy to use for simple configurations, it lacks some advanced features found in other libraries.

ConfigObj: ConfigObj is a Python library for parsing and manipulating configuration files in an INI-style format. Compared with Python’s built-in ConfigParser, it adds support for nested sections, list values, and validation, and it preserves comments when a file is written back. ConfigObj is particularly well-suited for complex configuration structures.

TomlKit: TomlKit is a Python library for parsing TOML configuration files. It offers a straightforward API for reading and writing TOML files and supports various data types, including strings, integers, floats, arrays, and nested tables. It also preserves comments and formatting when a file is edited and written back. For read-only access, Python 3.11 and later include the built-in tomllib module.

PyYAML: PyYAML is a Python library for parsing and serializing YAML data. YAML offers a flexible and expressive syntax for configuration settings, including nested structures and comments. PyYAML’s yaml.safe_load function turns such a file into nested dictionaries and lists. PyYAML is widely used and integrates well with Python projects.

JSON: Python’s built-in json module provides functions for parsing and serializing JSON data. JSON lacks some features found in other configuration formats; for example, it does not allow comments. Even so, it is easy to understand and work with, especially for developers familiar with JavaScript.
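Here is a minimal sketch of loading configuration with the two standard-library options above, json and configparser. The embedded strings are hypothetical stand-ins for config.json and config.ini files and mirror part of the YAML example. With real files, you would call json.load on an open file, or ConfigParser.read(path). For the YAML file itself, PyYAML’s yaml.safe_load returns the same kind of nested dictionaries that json.loads does.

```python
import configparser
import json

# Hypothetical JSON config mirroring part of the YAML example
JSON_CONFIG = """
{
  "train": {
    "dataloader": {"batch_size": 64, "shuffle": true, "num_workers": 4},
    "optimizer": {"type": "Adam", "lr": 0.001, "weight_decay": 0.0001},
    "epochs": 20
  }
}
"""

# Hypothetical INI equivalent: sections are one level deep, values are strings
INI_CONFIG = """
[optimizer]
type = Adam
lr = 0.001

[dataloader]
batch_size = 64
shuffle = true
"""


def load_json_config(text: str) -> dict:
    """Parse JSON text into nested dictionaries (numbers and booleans keep their types)."""
    return json.loads(text)


def load_ini_config(text: str) -> configparser.ConfigParser:
    """Parse INI text; typed access goes through getint/getfloat/getboolean."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    return parser


json_cfg = load_json_config(JSON_CONFIG)
ini_cfg = load_ini_config(INI_CONFIG)

print(json_cfg["train"]["optimizer"]["lr"])         # 0.001 (already a float)
print(ini_cfg.getfloat("optimizer", "lr"))          # 0.001 (converted from "0.001")
print(ini_cfg.getboolean("dataloader", "shuffle"))  # True
```

The design difference is visible in the last three lines. JSON keeps types and nesting. INI values stay strings until you ask for a specific type.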

9. Clean and anonymize data, removing any sensitive or personally identifiable information:

Remove API keys, passwords, or other credentials that should not be shared. Instead, have users supply them at runtime (e.g., through command-line arguments or environment variables).
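Here is a sketch of supplying a credential at runtime instead of hard-coding it. The environment variable name and the function names are hypothetical. The key can come from a command-line option or from an environment variable. The environment variable is usually the safer choice, since command-line arguments can end up in shell history.

```python
import argparse
import os
from typing import Optional

# Hypothetical variable name; the secret itself never appears in the code
API_KEY_ENV_VAR = "MY_SERVICE_API_KEY"


def parse_args(argv: Optional[list[str]] = None) -> argparse.Namespace:
    """Parse command-line options; --api-key is optional."""
    parser = argparse.ArgumentParser(description="Example data-access script")
    parser.add_argument(
        "--api-key",
        default=None,
        help=f"API key (if omitted, read from the {API_KEY_ENV_VAR} environment variable)",
    )
    return parser.parse_args(argv)


def resolve_api_key(cli_value: Optional[str] = None) -> str:
    """Return the key from the command line if given, else from the environment."""
    api_key = cli_value or os.environ.get(API_KEY_ENV_VAR)
    if not api_key:
        raise RuntimeError(
            f"No API key supplied: pass --api-key or set {API_KEY_ENV_VAR}"
        )
    return api_key


def main(argv: Optional[list[str]] = None) -> None:
    args = parse_args(argv)
    api_key = resolve_api_key(args.api_key)
    # Use api_key to authenticate requests here; report only that it was
    # found, never the value itself
    print(f"API key loaded ({len(api_key)} characters)")
```

In a real script, main() would be called from an if __name__ == "__main__": guard.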

10. Work from branches:

Working from change-specific branches in GitHub instead of directly in the main branch is important for several reasons:

Isolation of changes: Branches allow developers to isolate their changes from the main codebase until they are ready to be merged. This prevents incomplete or experimental (“work-in-progress” or “draft”) code from affecting the stability of the main branch.

Collaboration: Branches facilitate collaboration among team members by enabling them to work on different features or fixes simultaneously without interfering with each other’s work. Each developer can have their own branch to work on a specific task.

Code review: By working on branches, developers can create pull requests (PRs) to propose changes to the main branch. PRs offer an opportunity for code review, where team members can provide feedback, suggestions, and identify potential issues before merging the changes into the main branch.

Version control (more on this below): Branches serve as a way to manage different versions or variants of the codebase. They allow developers to experiment with new features or bug fixes without affecting the main branch, and can be easily switched between to work on different tasks.

12. Use version control:

Utilize version control systems like Git to track changes, collaborate with other developers, and manage code history.

Follow branching and merging strategies (e.g., GitFlow) to organize development workflow and facilitate collaboration.

Include configuration files in version control to track changes and ensure consistency across development environments.

Document the purpose and usage of each configuration parameter within the config file or accompanying documentation.

13. Use .gitignore files to block certain files from being committed:

The .gitignore file is used by Git to determine which files and directories should be ignored when tracking changes in a repository. It allows developers to specify files or patterns of files that Git should not include in version control. The primary purpose of .gitignore is to exclude files and directories that are not intended to be versioned or shared with others. This includes temporary files, build artifacts, system files, and sensitive data.

By ignoring unnecessary files, .gitignore helps keep the repository clean and focused on the source code and essential project files. This makes it easier for developers to navigate the repository and reduces clutter. Without a .gitignore file, it’s easy for developers to inadvertently add unwanted files to the repository, especially when using commands like git add . to stage changes. .gitignore helps prevent accidental inclusion of such files.

When working in teams, different developers may have different development environments and tools that generate additional files. By using a shared .gitignore file, teams can ensure consistency across environments and avoid conflicts caused by differences in ignored files. In that regard, a nice thing about .gitignore files is their customizability to suit the specific needs of the project and development environment. Developers can specify file names, file extensions, directories, or even use wildcard patterns to match multiple files.

Lastly, Git supports both global and repository-level ignore rules. A global ignore file, set with Git’s core.excludesFile option, applies to every repository for your user account. It is useful for excluding files that your own tools generate across many projects, such as editor or operating-system files. A repository’s own .gitignore files apply only to that repository and can supplement or override the global rules.

14. Contribute quality pull requests:

To merge the changes from a dedicated, change-specific branch into the main branch, it is best practice to submit a quality pull request (PR). Other collaborators can then vet the changes and make sure they behave as expected and don’t introduce breaking changes.

To make a good pull request in GitHub, consider the following best practices:

Create a descriptive title: The title should accurately summarize the purpose of the PR, making it easy for reviewers to understand what changes are being proposed.

Provide a clear description: Write a detailed description of the changes included in the PR, explaining the problem being addressed and the solution implemented. Include any relevant context, background information, or dependencies.

Include related issues or pull requests: If the PR is related to specific issues or other PRs, reference them in the description using GitHub’s linking syntax (e.g., #issue_number or GH-PR-number).

Break down large changes: If the PR includes a significant number of changes or multiple unrelated changes, consider breaking it down into smaller, more manageable PRs. This makes the review process easier and allows for better focus on individual changes.

Follow coding standards and conventions: Ensure that the code adheres to the project’s coding standards, style guidelines, and best practices. Consistency in formatting, naming conventions, and code organization is important for readability and maintainability.

Include tests: If applicable, include tests to verify the correctness and functionality of the changes. Test coverage helps ensure that the code behaves as expected and reduces the risk of introducing regressions.

Review your own changes: Before submitting the PR, review your own changes to check for errors, typos, or any unintended side effects. This helps streamline the review process and demonstrates attention to detail.

Solicit feedback: If you’re unsure about any aspect of the changes, don’t hesitate to ask for feedback from colleagues or maintainers. Collaboration and communication are key to producing high-quality code.

15. Write unit tests:

Implement unit tests using frameworks like unittest or pytest to verify the correctness of individual components and prevent regressions.

Aim for high test coverage to ensure comprehensive testing of code functionality.
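Here is a minimal unittest sketch. The function under test, cloud_free_fraction, is hypothetical. In a real project it would be imported from your package rather than defined next to its tests. Saved in a file named like test_cloud_mask.py, the tests can be run with python -m unittest. pytest also collects unittest.TestCase classes.

```python
import unittest


def cloud_free_fraction(cloud_mask: list[int]) -> float:
    """Return the fraction of pixels flagged clear (0) in a binary cloud mask."""
    if not cloud_mask:
        raise ValueError("cloud_mask must not be empty")
    return cloud_mask.count(0) / len(cloud_mask)


class TestCloudFreeFraction(unittest.TestCase):
    def test_all_clear(self):
        self.assertEqual(cloud_free_fraction([0, 0, 0]), 1.0)

    def test_mixed_mask(self):
        self.assertAlmostEqual(cloud_free_fraction([0, 1, 1, 0]), 0.5)

    def test_empty_mask_raises(self):
        # Edge cases deserve their own tests so regressions are caught early
        with self.assertRaises(ValueError):
            cloud_free_fraction([])
```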

16. Use virtual environments:

Create isolated virtual environments using tools like virtualenv or conda to manage project dependencies and avoid conflicts between packages.
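The text mentions virtualenv and conda. Python’s standard-library venv module covers the same need for pip-based projects and is most often used from the shell as python -m venv .venv. The sketch below uses venv’s programmatic API instead, plus a check for whether the current interpreter runs inside such an environment. The function names are hypothetical.

```python
import sys
import venv
from pathlib import Path


def in_virtual_env() -> bool:
    """Return True if the running interpreter belongs to a venv/virtualenv environment."""
    # Inside a venv, sys.prefix points at the environment, while sys.base_prefix
    # still points at the base installation (this check does not detect conda envs)
    return sys.prefix != sys.base_prefix


def create_env(env_dir: str, with_pip: bool = False) -> Path:
    """Create an isolated environment at env_dir; pip is installed only if with_pip=True."""
    venv.create(env_dir, with_pip=with_pip)
    return Path(env_dir)
```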

17. Document dependencies:

Document project dependencies explicitly by maintaining a pyproject.toml, requirements.txt, or environment.yml file that specifies the packages your project needs and, ideally, their versions.

Use dependency management tools like pip or conda to install and manage dependencies consistently.
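One way to capture exact versions for a requirements.txt file is to read them from the current environment with the standard-library importlib.metadata module. This sketch assumes a particular workflow and is not a replacement for tools such as pip freeze or conda env export. The function names are hypothetical.

```python
from importlib.metadata import PackageNotFoundError, version


def pin_requirements(package_names: list[str]) -> list[str]:
    """Return "name==version" lines for whichever of package_names are installed."""
    pinned = []
    for name in package_names:
        try:
            pinned.append(f"{name}=={version(name)}")
        except PackageNotFoundError:
            # Not installed in this environment; skip it
            continue
    return pinned


def write_requirements(package_names: list[str], path: str = "requirements.txt") -> None:
    """Write the pinned versions, one per line, to a requirements-style file."""
    with open(path, "w", encoding="utf-8") as handle:
        handle.write("\n".join(pin_requirements(package_names)) + "\n")
```

For the imagery example in this section, write_requirements(["pystac-client", "stackstac", "xarray"]) would record whichever of those packages are installed.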

18. Automate testing and CI/CD:

Set up automated testing pipelines using continuous integration (CI) tools like GitHub Actions to run tests automatically on code changes.

Implement continuous delivery/deployment (CD) pipelines to automate the process of building, testing, and deploying code changes to production environments.

19. Code reviews and peer feedback:

Conduct regular PR reviews to provide feedback, identify potential issues, and ensure adherence to coding standards and best practices.

Encourage collaboration and knowledge sharing among team members through constructive peer reviews.