Methodology and Results

Methods

AIAIA Classifier

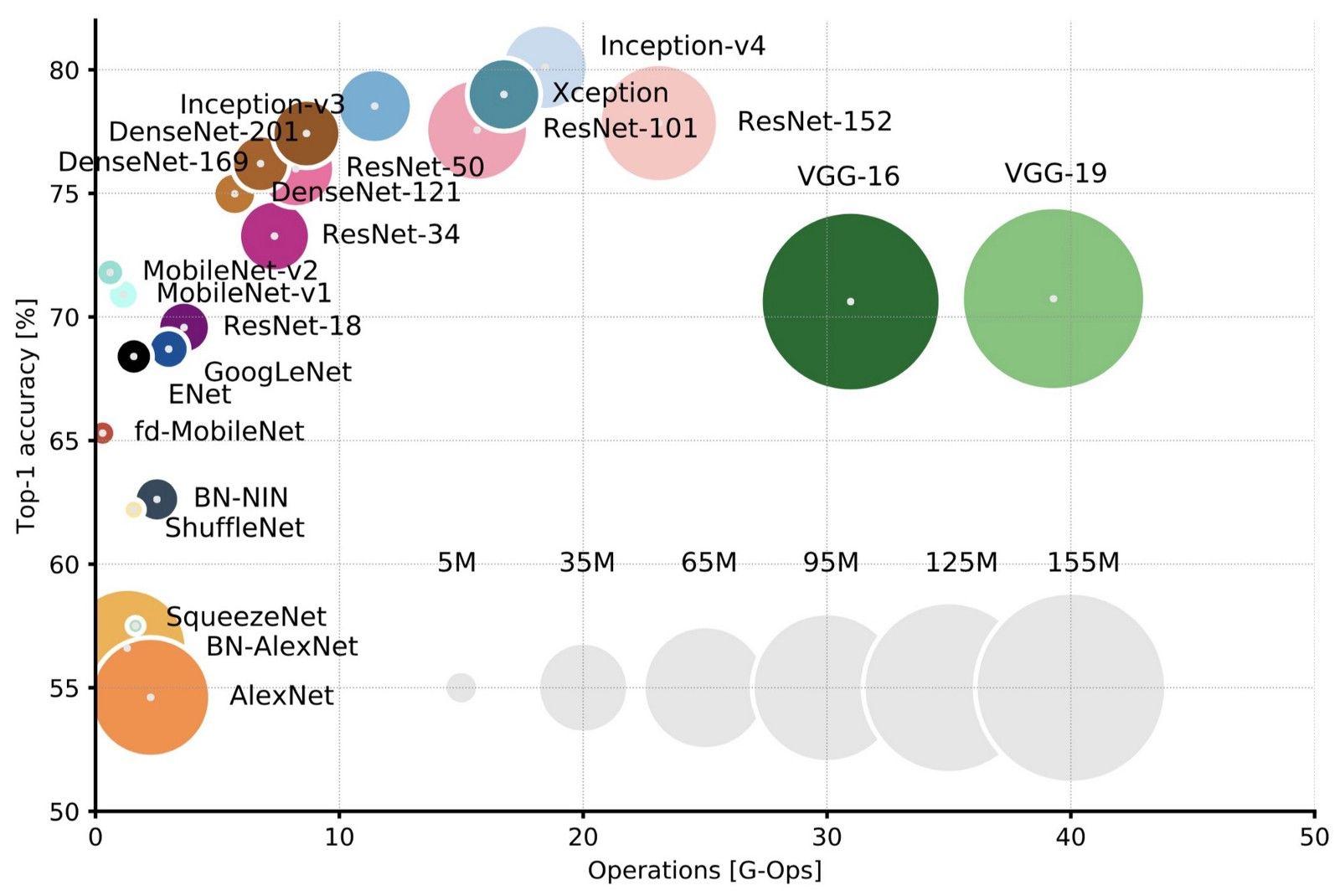

The backbone of the AIAIA Classifier is Xception (Chollet 2016)³, one of the top-performing state-of-the-art convolutional neural network (CNN) architectures (Figure 10). We used Xception pre-trained on ImageNet; it performs well while being efficient compared with other pre-trained networks. The model script is written in Keras, a high-level Python package that uses Google's TensorFlow library as its backend.
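Xception's efficiency comes largely from the depthwise separable convolutions named in the title of Chollet's paper. As a rough illustration, the sketch below compares the weight count of a standard convolution with that of a depthwise separable one. The layer size (3 x 3 kernel, 256 to 256 channels) is an arbitrary example rather than a value from the AIAIA model, and biases are ignored.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a k x k convolution from c_in to c_out channels (biases ignored)."""
    return k * k * c_in * c_out


def separable_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a depthwise k x k convolution followed by a 1 x 1 pointwise one."""
    depthwise = k * k * c_in  # one k x k spatial filter per input channel
    pointwise = c_in * c_out  # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


if __name__ == "__main__":
    # Arbitrary example layer: 3 x 3 kernel, 256 -> 256 channels.
    k, c_in, c_out = 3, 256, 256
    std = standard_conv_params(k, c_in, c_out)
    sep = separable_conv_params(k, c_in, c_out)
    print(f"standard: {std:,}  separable: {sep:,}  ratio: {std / sep:.1f}x")
    # prints "standard: 589,824  separable: 67,840  ratio: 8.7x"
```

For this example layer the separable version needs roughly 8.7 times fewer weights, which is the kind of saving that lets Xception stay compact relative to networks built from standard convolutions.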

The AIAIA Classifier accepts image chips in TFRecord format⁴ as input, with each chip labeled “yes” or “no” (1 or 0). Chips labeled “yes” (“object”) contain wildlife, human activities, livestock, or any combination of these; chips labeled “no” (“not-object”) are “empty” images without any objects of interest. The TFRecords contain 7,000 “object” and 7,000 “not-object” image chips, split 70:20:10 into training, validation, and test sets. The models were trained with sigmoid focal loss⁵, which is particularly useful for classification under heavy class imbalance. In our case, there were many more pixels that did not belong to objects than pixels that did, and there was also substantial imbalance among the object classes in all three object detection models.
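To make the loss concrete, here is a minimal pure-Python sketch of the binary (sigmoid) focal loss of Lin et al., following the same per-example computation as `tfa.losses.sigmoid_focal_crossentropy`. The values `alpha=0.25` and `gamma=2.0` are that function's defaults, not settings confirmed for the AIAIA training runs, and averaging over the batch is an assumption of this sketch.

```python
import math
from typing import Sequence


def sigmoid_focal_loss(y_true: Sequence[int], y_prob: Sequence[float],
                       alpha: float = 0.25, gamma: float = 2.0,
                       eps: float = 1e-7) -> float:
    """Mean binary focal loss for labels in {0, 1} and sigmoid probabilities."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)             # clip to avoid log(0)
        p_t = p if y == 1 else 1.0 - p               # probability given to the true class
        alpha_t = alpha if y == 1 else 1.0 - alpha   # class-balancing weight
        # Standard cross-entropy -log(p_t), scaled by (1 - p_t) ** gamma so that
        # easy, confidently correct examples contribute very little.
        total += -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)
    return total / len(y_true)


if __name__ == "__main__":
    easy = sigmoid_focal_loss([1], [0.95])  # confident and correct
    hard = sigmoid_focal_loss([1], [0.30])  # uncertain and wrong
    print(f"easy: {easy:.6f}  hard: {hard:.6f}")
```

With `gamma=0` and `alpha=0.5` the expression reduces to half the ordinary binary cross-entropy; raising `gamma` shrinks the contribution of well-classified examples so that training focuses on the hard ones.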

The AIAIA Classifier model scripts were containerized and registered in Azure Container Registry (ACR) or Google Container Registry (GCR). We then deployed Kubeflow to the cloud environment; once it is up and running on AKS (or GKE), TFJob model experiments can be deployed to GPU machines in the cluster to start model training (Figure 9). Model evaluation is then performed to select the best-performing trained models from multiple experiments.
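A TFJob is a Kubernetes custom resource that the Kubeflow training operator turns into training pods. The sketch below builds a minimal single-worker TFJob manifest as a Python dict and prints it as JSON. The job name, the `kubeflow` namespace, the image path `myregistry.azurecr.io/aiaia-classifier:latest`, and the `--learning-rate` flag are all hypothetical placeholders, not values from the AIAIA deployment.

```python
import json
from typing import List


def make_tfjob(name: str, image: str, args: List[str],
               gpus: int = 1, namespace: str = "kubeflow") -> dict:
    """Build a single-worker Kubeflow TFJob manifest (API group kubeflow.org/v1)."""
    container = {
        # The TFJob operator looks for a container named "tensorflow".
        "name": "tensorflow",
        "image": image,
        "args": args,
        # GPUs are requested through the NVIDIA device plugin resource.
        "resources": {"limits": {"nvidia.com/gpu": gpus}},
    }
    return {
        "apiVersion": "kubeflow.org/v1",
        "kind": "TFJob",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "tfReplicaSpecs": {
                "Worker": {
                    "replicas": 1,
                    "restartPolicy": "OnFailure",
                    "template": {"spec": {"containers": [container]}},
                }
            }
        },
    }


if __name__ == "__main__":
    # Hypothetical registry path and training flag; replace with the real ones.
    job = make_tfjob(
        name="aiaia-classifier-exp1",
        image="myregistry.azurecr.io/aiaia-classifier:latest",
        args=["--learning-rate", "1e-4"],
    )
    print(json.dumps(job, indent=2))
```

Saving the printed manifest to a file and running `kubectl apply -f` on it submits one experiment. Generating several manifests that differ only in their arguments is one simple way to launch the multiple experiments that model evaluation then compares.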

AIAIA Detectors

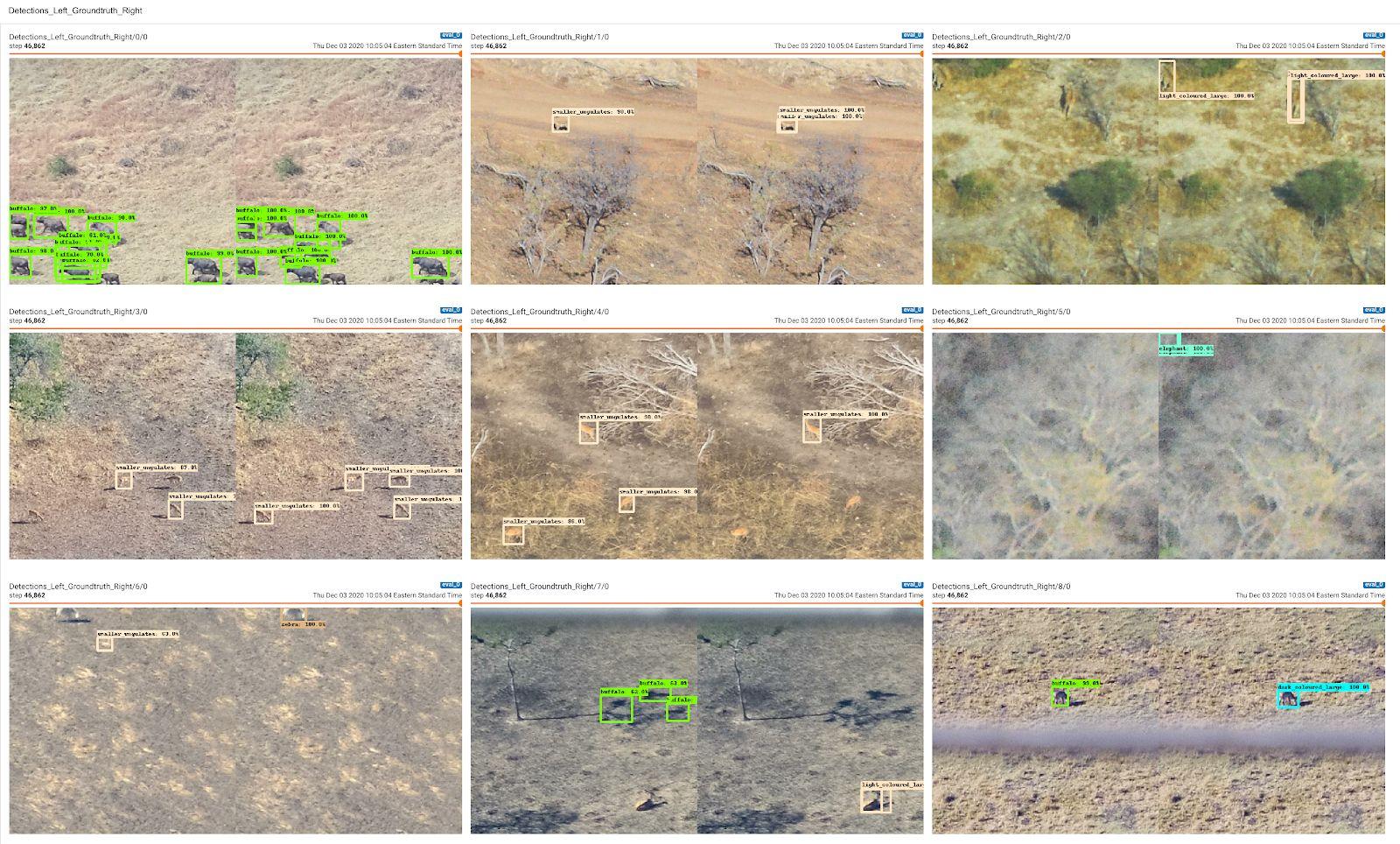

We used TensorFlow’s Object Detection API to train the object detection models for this task. Object detection models take an image as input and generate bounding boxes, predicted classes, and confidence scores for each prediction. Using the TFRecord training data, we trained models for wildlife, human activities, and livestock on GCP and Azure with Kubeflow (Figure 7). Kubeflow is a toolkit that makes ML workflows on Kubernetes simpler to deploy, portable, and scalable.
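To show what those three outputs look like in practice, the sketch below post-processes one image's detections. It assumes the conventions of an exported Object Detection API model, where `detection_boxes` holds normalized `[ymin, xmin, ymax, xmax]` values and `detection_classes` and `detection_scores` are parallel lists; the function name and the 0.5 score threshold are hypothetical choices for illustration.

```python
from typing import List, Sequence


def to_pixel_detections(boxes: Sequence[Sequence[float]],
                        classes: Sequence[float],
                        scores: Sequence[float],
                        image_height: int, image_width: int,
                        min_score: float = 0.5) -> List[dict]:
    """Keep confident detections and convert normalized boxes to pixel coordinates."""
    results = []
    for (ymin, xmin, ymax, xmax), cls, score in zip(boxes, classes, scores):
        if score < min_score:
            continue  # drop low-confidence predictions
        results.append({
            "class_id": int(cls),
            "score": float(score),
            # Pixel box as (left, top, right, bottom).
            "box": (round(xmin * image_width), round(ymin * image_height),
                    round(xmax * image_width), round(ymax * image_height)),
        })
    return results


if __name__ == "__main__":
    dets = to_pixel_detections(
        boxes=[[0.1, 0.2, 0.5, 0.6], [0.0, 0.0, 1.0, 1.0]],
        classes=[3.0, 1.0],
        scores=[0.9, 0.2],
        image_height=100, image_width=200,
    )
    print(dets)
```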

The final AIAIA Detectors are designed to predict the following in Tanzania: 1) nine classes of wildlife species and their counts; 2) five classes of human activities and their counts; and 3) three classes of livestock and their counts. For the training classes and their counts, see Figure 5. The detector backbone is Faster R-CNN ResNet-101⁶, pre-trained on the Snapshot Serengeti dataset⁷. The Snapshot Serengeti dataset contains approximately 2.56 million sequences of camera trap images, totaling 7.1 million images, from the Snapshot Serengeti project⁸. The model was scripted and trained with the TensorFlow 1.15 Object Detection API. Before adopting Faster R-CNN ResNet-101, we tried SSD MobileNet, ResNet 50, and ResNet 101 models, none of which converged. Model training sessions were monitored with TensorBoard (Figure 11).
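The per-class counts can be derived directly from a detector's output. The sketch below tallies confident detections by class name using a label map; Object Detection API label maps assign integer ids starting at 1. The label map here is a hypothetical placeholder for a three-class livestock detector (the real classes are listed in Figure 5), and the 0.5 threshold is likewise an assumption.

```python
from collections import Counter
from typing import Dict, Iterable


def count_by_class(class_ids: Iterable[float], scores: Iterable[float],
                   label_map: Dict[int, str], min_score: float = 0.5) -> Counter:
    """Count detections per class name, keeping only those at or above min_score."""
    counts: Counter = Counter()
    for cls, score in zip(class_ids, scores):
        # Exported detection models typically return class ids as floats.
        name = label_map.get(int(cls))
        if name is not None and score >= min_score:
            counts[name] += 1
    return counts


if __name__ == "__main__":
    # Hypothetical placeholder label map for a three-class livestock detector.
    livestock_labels = {1: "livestock_a", 2: "livestock_b", 3: "livestock_c"}
    print(count_by_class([1.0, 1.0, 3.0, 2.0], [0.91, 0.77, 0.64, 0.31],
                         livestock_labels))
    # Counter({'livestock_a': 2, 'livestock_c': 1})
```

Counting after thresholding keeps the count tied to the same confidence cut-off used when reviewing boxes; a different threshold will change the counts.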

__________________________________

3 "Xception: Deep Learning with Depthwise Separable ...." https://arxiv.org/abs/1610.02357. Accessed 27 Jan. 2021.

4 "TFRecord and tf.train.Example | TensorFlow Core." 19 Sep. 2020, https://www.tensorflow.org/tutorials/load_data/tfrecord. Accessed 27 Jan. 2021.

5 "tfa.losses.sigmoid_focal_crossentropy | TensorFlow Addons." https://www.tensorflow.org/addons/api_docs/python/tfa/losses/sigmoid_focal_crossentropy. Accessed 27 Jan. 2021.

6 "models/tf1_detection_zoo.md at master · tensorflow/models · GitHub." https://github.com/tensorflow/models/blob/master/research/object_detection/g3doc/tf1_detection_zoo.md. Accessed 28 Jan. 2021.

7 "Snapshot Serengeti - LILA BC." 24 Jun. 2019, http://lila.science/datasets/snapshot-serengeti. Accessed 28 Jan. 2021.

8 "Snapshot Serengeti — Zooniverse." https://www.snapshotserengeti.org/. Accessed 28 Jan. 2021.